Meet Reachy Mini: Building an AI-Powered Conference Badge Reader

How I built a fun conference booth experience combining an open-source robot, vi…

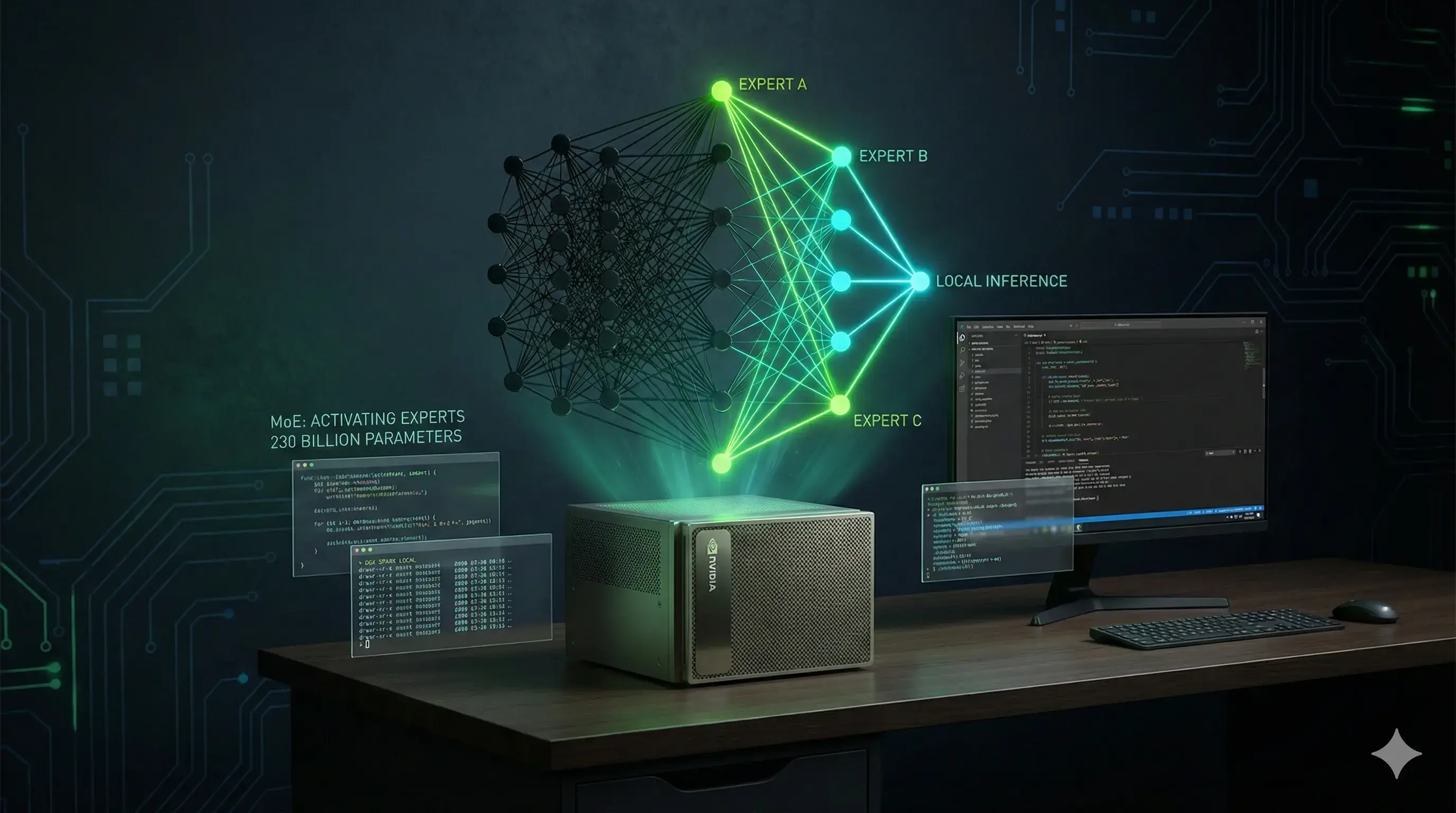

TL;DR: You can now run an open-source AI model that matches the coding performance of Claude and GPT on your desk, no cloud required. MiniMax M2.5, a 230B parameter model, achieves 80.2% on SWE-Bench Verified (on par with frontier APIs) and runs at ~26 tokens/sec on NVIDIA's DGX Spark using quantization and llama.cpp. This matters because pricing and subscriptions are subject to change, enterprise subscription plans may be going away, and some codebases simply can't leave the building. Local inference that's competitive with cloud APIs is no longer a compromise; it's becoming a viable default for sensitive or cost-conscious workloads.

In my previous post about the Reachy Mini conference badge app, I mentioned wanting to experiment with local LLMs using NVIDIA's DGX Spark to eliminate cloud API dependencies. That exploration led me down an interesting path that was triggered by a Slack message of Daniel Jones. So I started with MiniMax's M2.5 model, a 230B parameter beast that somehow runs smoothly on my desktop.

Don't get me wrong, I'm a huge fan and heavy user of Claude Code. But there a scenarios out there where the cloud dependency creates friction:

The DGX Spark sitting on my desk seemed like the perfect test bed for local inference at scale.

To put the local inference argument in perspective, here's what you're looking at with Claude Code on API billing today:

| Model | Input | Output |

|---|---|---|

| Opus 4.6 | $5.00 / 1M tokens | $25.00 / 1M tokens |

| Sonnet 4.5 | $3.00 / 1M tokens | $15.00 / 1M tokens |

| Haiku 4.5 | $1.00 / 1M tokens | $5.00 / 1M tokens |

According to Anthropic's own documentation, the average Claude Code cost is ~$6 per developer per day, with 90% of users staying below $12/day. For teams, that works out to roughly $100–200 per developer per month with Sonnet. With Opus, costs run significantly higher.

Subscription plans ($20 Pro, $100 Max 5x, $200 Max 20x) offer much better value than raw API billing. Community reports on r/ClaudeCode consistently confirm this: developers report burning through $10–40 of API credits in a single day, with one user estimating $800+ in equivalent API costs while on the $100 Max plan.

Subscriptions are the best bang for the buck right now, in agentic loops, they deliver up to 36x better value than API billing because cached token reads are effectively free on subscriptions, while the API charges 10% of input cost on every cache hit. The $100 Max 5x plan is the sweet spot, actually over-delivering on its advertised limits.

But that's exactly the problem: if these plans get restructured or dropped for enterprise users, you're back to API pricing overnight — and that's 5–10x more expensive. At ~€500/developer/month on API billing, the ~€4,500 investment in a DGX Spark pays for itself in nine months for a single developer and it only gets better from there. Local inference sidesteps all of this: after the hardware investment, the marginal cost per token is effectively zero. No rate limits, no weekly resets, no pricing surprises.

Mainly because Daniel triggered me to give it a try, but also that MiniMax M2.5 is the latest open model from MiniMax, and the benchmarks are remarkable. It achieves SOTA (state of the art) results for open models in coding, agentic tool use, and search tasks which are areas that matter most for development workflows.

The model uses a Mixture-of-Experts (MoE) architecture:

The MoE design is clever, you get the knowledge capacity of a 230B model with the inference speed of a 10B model. Only the relevant experts activate for each token, keeping compute manageable on my desk.

What caught my attention was how M2.5 stacks up against frontier models on coding and agentic tasks:

| Benchmark | MiniMax M2.5 | Claude Opus 4.5 | GPT-5.2 |

|---|---|---|---|

| SWE-Bench Verified | 80.2% | 80.9% | 80.0% |

| Multi-SWE-Bench | 51.3% | 50.0% | — |

| SWE-Bench Multilingual | 74.1% | 77.5% | 72.0% |

| BFCL multi-turn | 76.8% | 68.0% | — |

| BrowseComp | 76.3% | 67.8% | 65.8% |

| Terminal Bench 2 | 51.7% | 53.4% | 54.0% |

80.2% on SWE-Bench Verified puts it at SOTA for open models, essentially matching Claude and GPT-5. The 76.8% on BFCL multi-turn (tool calling) is particularly impressive - it outperforms Claude Opus 4.5's 68% on this benchmark.

For multi-repository changes (Multi-SWE-Bench), M2.5 scores 51.3% vs Claude's 50.0%. This matters for real-world codebases where changes span multiple repos.

The model also performs well on reasoning and search tasks:

| Benchmark | MiniMax M2.5 | Claude Opus 4.5 |

|---|---|---|

| AIME25 (Math) | 86.3 | 91.0 |

| GPQA-D (Science) | 85.2 | 87.0 |

| Wide Search | 70.3 | 76.2 |

| RISE | 50.2 | 50.5 |

Not quite Claude-level on pure reasoning, but close enough that the local inference benefits outweigh the gap for my use cases.

The DGX Spark has an unusual memory architecture that turns out to be perfect for large models:

| Component | Spec |

|---|---|

| GPU | NVIDIA GB10 Blackwell (sm_121) |

| Memory | 128GB unified (shared CPU/GPU) |

| CPU | 20 ARM64 Grace cores |

| CUDA | 13.0 |

The unified memory is key. Traditional setups struggle with the VRAM/RAM split - you're constantly optimizing which layers go where. Here, the full 128GB is GPU-accessible. The model just... fits.

The full bf16 model at 457GB obviously won't fit in 128GB. This is where Unsloth's quantization work becomes essential.

Unsloth provides GGUF (GPT-Generated Unified Format) versions using their Dynamic 2.0 approach. Instead of uniformly quantizing all layers, they keep important layers at higher precision (8 or 16-bit) while compressing less critical layers more aggressively. The result is 3-bit average with quality closer to 6-bit.

| Quant | Size | Reduction | Target Hardware |

|---|---|---|---|

| UD-Q3KXL | 101GB | -62% | 128GB (DGX Spark, M-series Mac) |

| Q8_0 | 243GB | -47% | 256GB systems |

| UD-Q2_K | ~80GB | -83% | 96GB devices |

I went with UD-Q3KXL. The model is split into 4 parts (~25GB each), and llama.cpp handles the multi-file loading automatically.

My benchmarks on DGX Spark show solid results: ~26 tokens/sec decode (token generation) on average, with prefill speeds peaking at 473 tok/s for prompt ingestion. The decode rate stays remarkably consistent across different prompt lengths, only dropping slightly from ~27 tok/s at short prompts to ~24 tok/s at 4K+ tokens.

The GB10 requires specific build flags that aren't in standard llama.cpp releases yet. I created a Dockerfile that handles this:

RUN cmake -B build \

-DGGML_CUDA=ON \

-DGGML_CUDA_FA_ALL_QUANTS=ON \

-DCMAKE_CUDA_ARCHITECTURES="121" \

-DGGML_CPU_AARCH64=ON \

-DBUILD_SHARED_LIBS=OFF \

&& cmake --build build -j$(nproc) --target llama-server

The important flags:

CMAKE_CUDA_ARCHITECTURES=121 targets Blackwell specificallyGGML_CPU_AARCH64=ON enables ARM64 NEON/SVE optimizations for the Grace coresGGML_CUDA_FA_ALL_QUANTS=ON enables Flash Attention for quantized modelsBUILD_SHARED_LIBS=OFF for static linking (per Unsloth's recommendation)MiniMax specifies exact sampling parameters - and they're different from typical defaults:

- "--temp"

- "1.0" # Higher than typical

- "--top-p"

- "0.95"

- "--top-k"

- "40"

- "--min-p"

- "0.01" # Lower than default 0.05

- "--repeat-penalty"

- "1.0" # Disabled

The temperature of 1.0 feels high, but it's what the model was trained for. MiniMax explicitly recommends these settings for best performance.

Other critical flags for DGX Spark:

- "-ngl"

- "999" # All layers on GPU

- "-fa"

- "on" # Flash Attention

- "-c"

- "131072" # 128K context

- "--no-mmap" # Critical for unified memory

The --no-mmap flag is essential. Without it, the unified memory system triggers constant page faults and performance drops to a crawl. This took me longer to figure out than I'd like to admit.

The full setup can be found at github.com/re-cinq/minimax-m2.5-nvidia-dgx. It includes the Dockerfile, docker-compose configuration, custom chat template, benchmark script, and agent configuration. Clone the repo and you're three commands away:

# 1. Clone the repo

git clone https://github.com/re-cinq/minimax-m2.5-nvidia-dgx.git

cd minimax-m2.5-nvidia-dgx

# 2. Download model (~101GB, 4 parts)

huggingface-cli download unsloth/MiniMax-M2.5-GGUF \

--local-dir ./models --include '*UD-Q3_K_XL*'

# 3. Build and start

cd docker

docker compose build # First time only

docker compose up -d

Model loading might take up to 5 minutes, because it will load 101GB into RAM. You can follow the progress with docker compose logs -f. Once ready, you have an OpenAI-compatible endpoint at localhost:8080/v1:

# Quick health check

curl http://localhost:8080/health

# Test a completion

curl http://localhost:8080/v1/chat/completions \

-H "Content-Type: application/json" \

-d '{"model": "minimax-m2.5", "messages": [{"role": "user", "content": "Hello"}]}'

Or use it from Python with any OpenAI-compatible client:

from openai import OpenAI

client = OpenAI(

base_url="http://localhost:8080/v1",

api_key="not-needed"

)

response = client.chat.completions.create(

model="minimax-m2.5",

messages=[{"role": "user", "content": "Write a Python async task queue"}]

)

The repo also includes a benchmark.sh script to verify your setup is performing as expected, and a config/ directory with agent configurations if you want to use this with agentic coding tools.

On DGX Spark with UD-Q3KXL:

The decode speed stays consistent regardless of prompt length, which is exactly what you want for interactive use. The 3-bit quant is actually faster than Q6_K would be, less memory bandwidth required. The quality difference on coding tasks is negligible in my testing.

Unified memory changes the game. The traditional dance of offloading layers between VRAM and RAM disappears. The model lives in one place and the GPU accesses it directly.

mmap is the enemy on unified memory. The kernel's memory-mapped file handling doesn't play well with unified architectures. Force the model to load directly with --no-mmap.

MoE efficiency. 230B parameters sounds massive, but with only 10B active, generation speed is comparable to much smaller models. You're getting the knowledge of a large model with the speed of a small one.

Dynamic quantization FTW. Unsloth's approach of preserving precision in important layers means 3-bit performs like 6-bit on tasks that matter.

Open models have caught up. 80.2% on SWE-Bench Verified, 76.8% on BFCL, these numbers match or exceed frontier APIs on the benchmarks but I mainly care about real coding workflows.

This gives me what I wanted: a local, private inference endpoint that's OpenAI-compatible and competitive with cloud APIs on coding tasks. The setup is open source if you want to try it yourself.

The next step is to use this as a backend for Claude Code. Since the endpoint is OpenAI-compatible, it should fit right in. I've already set up the full Claude Code environment on the DGX Spark with custom skills, team configurations, and slash commands - everything needed to run agentic coding workflows entirely on local hardware. That's a post for another day.

The broader takeaway is that the gap between open and closed models is shrinking fast. A year ago, running something competitive with frontier APIs on desktop hardware would have been unthinkable. Now it's a Docker Compose file and a bit of tinkering. This setup will be quite usefull to analyse sensitve codebases.

How I built a fun conference booth experience combining an open-source robot, vi…

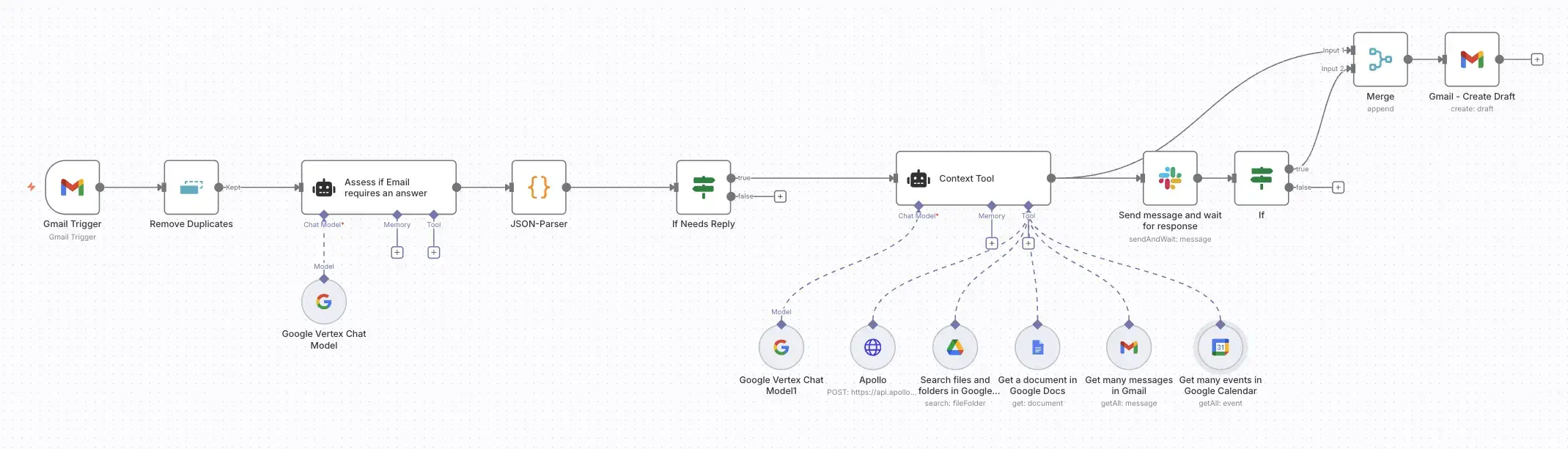

Build an AI-powered email assistant that gathers context from your calendar, doc…

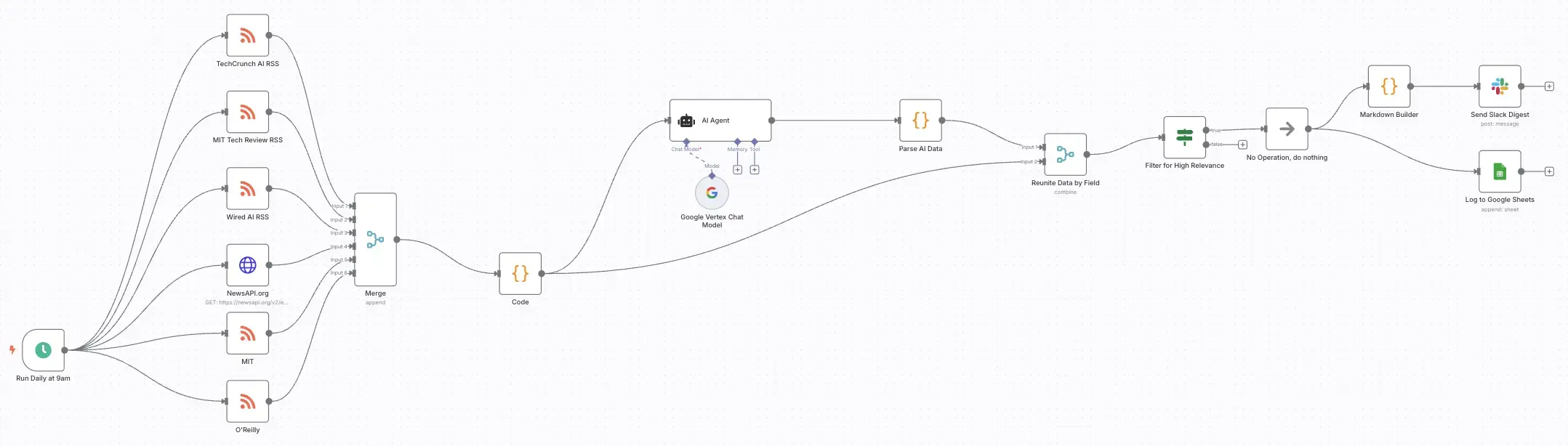

Build an automated workflow gathering AI news from multiple sources. Use LLMs to…

Get a shared vocabulary of proven Transformation Patterns, common Anti-Patterns, and Paradigm Patterns to have more effective, data-driven conversations about your strategy and architecture.

For a personalized starting point, take our free online assessment. Your results will give you a detailed report on your current maturity and suggest the most relevant patterns to focus on first.

Every Tuesday, we deliver one short, powerful read on AI Native to help you lead better, adapt faster, and build smarter—based on decades of experience helping teams transform for real.