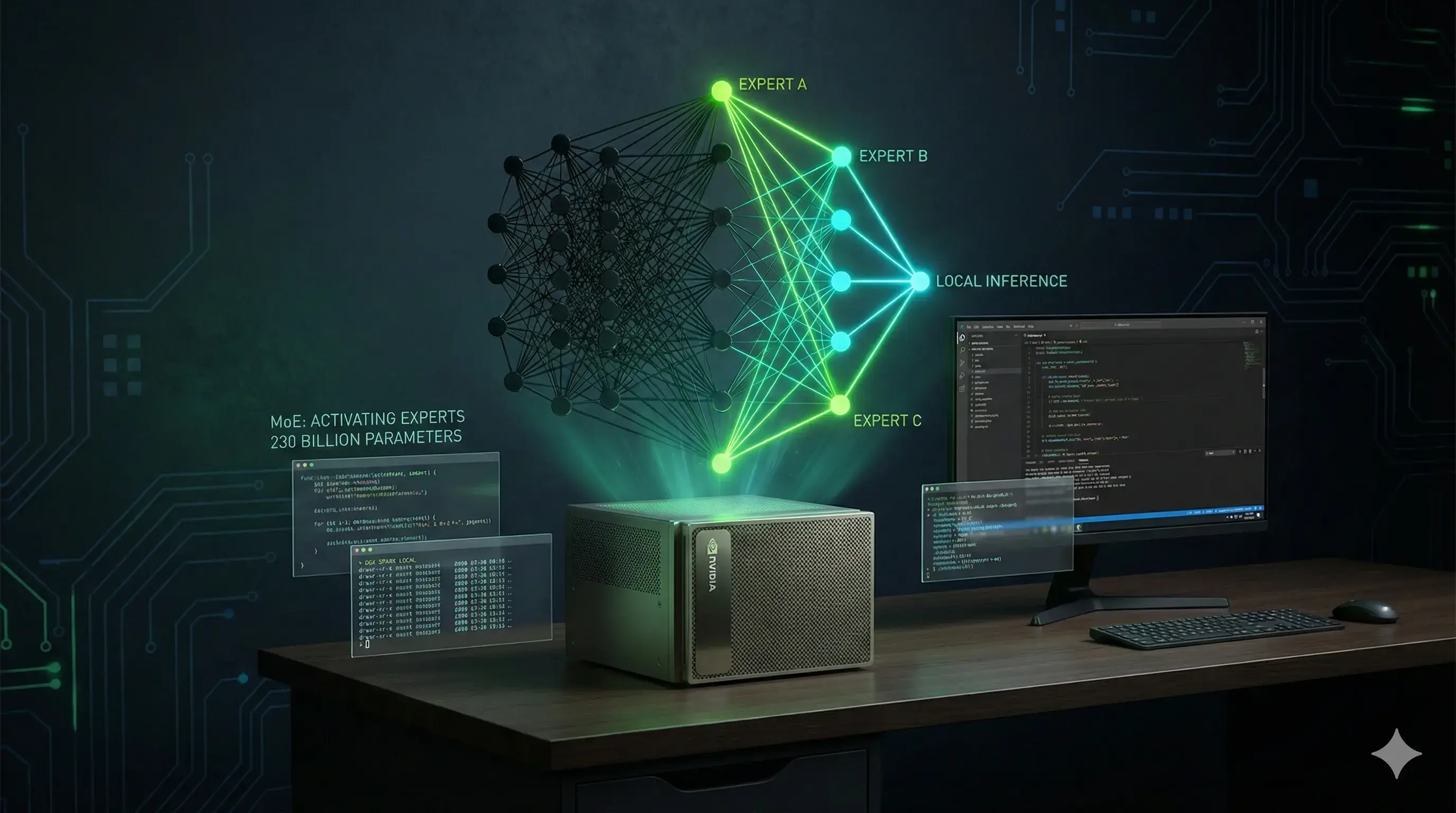

Running MiniMax M2.5 Locally on NVIDIA DGX Spark

How I got a 230B parameter open model running on desktop hardware using NVIDIA D…

I recently got my hands on a Reachy Mini from Pollen Robotics, and I have to say, it's been one of the most enjoyable pieces of technology I've worked with in a while. The assembly happened over Christmas, which turned into an unexpected family activity. My kids were eager to help with the build, and watching their excitement as the robot came together piece by piece was fun. There's something uniquely satisfying about showing your kids how code translates into actual movement and personality.

To put it through its paces, I built a conference booth application that reads attendee badges and finds their LinkedIn profiles. It is GDPR compliant, of course: the attendee consents to the use of the captured picture by giving a thumbs up, which the robot recognises. The result? A fun, interactive experience that genuinely engages people at a conference booth.

Reachy Mini is a small desktop robot developed by Pollen Robotics, a company focused on open-source robots that was recently acquired by Hugging Face.

What makes Reachy Mini interesting:

Hugging Face's acquisition of Pollen Robotics (announced April 2025) aims to merge advanced open-source AI with physical hardware, with the Reachy Mini serving as the flagship "embodied AI" platform.

The Reachy Mini is unique and superior to previous Pollen products (like the full-sized Reachy 2) primarily due to its accessibility, cost, and deep integration with the Hugging Face AI ecosystem.

The robot connects via USB-C or WiFi, with a daemon that exposes a REST API and WebSocket interface. This architecture means you can run your AI workloads on a powerful machine while the robot handles the physical interaction.

The idea was simple: create an engaging booth experience where Reachy Mini reads conference badges and finds attendees on LinkedIn. The flow looks like this:

1. Attendee approaches → Robot looks at them, wiggles antennas excitedly

2. VLM reads badge → Display shows "Hi [Name]! Give me a thumbs up!"

3. Thumbs up detected → Robot "thinks", searches LinkedIn

4. Profile found → Celebration! Shows LinkedIn profile on TV

5. Not found → Friendly shrug and welcome message
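The flow above maps naturally onto a small state machine. The following is a minimal sketch, not the application's actual code: the state names, event names, and the `next_state` helper are all assumptions made for illustration.

```python
from enum import Enum, auto


class BoothState(Enum):
    IDLE = auto()              # waiting for someone to approach
    GREETING = auto()          # attendee detected, badge being read
    AWAITING_CONSENT = auto()  # name shown, waiting for a thumbs up
    SEARCHING = auto()         # consent given, LinkedIn lookup running
    CELEBRATING = auto()       # profile found
    SHRUGGING = auto()         # no profile found


# (state, event) -> next state. Event names are hypothetical.
TRANSITIONS = {
    (BoothState.IDLE, "person_detected"): BoothState.GREETING,
    (BoothState.GREETING, "badge_read"): BoothState.AWAITING_CONSENT,
    (BoothState.AWAITING_CONSENT, "thumbs_up"): BoothState.SEARCHING,
    (BoothState.SEARCHING, "profile_found"): BoothState.CELEBRATING,
    (BoothState.SEARCHING, "profile_missing"): BoothState.SHRUGGING,
    (BoothState.CELEBRATING, "done"): BoothState.IDLE,
    (BoothState.SHRUGGING, "done"): BoothState.IDLE,
}


def next_state(state, event):
    """Return the next state; events that do not apply leave the state unchanged."""
    if event == "person_left":
        return BoothState.IDLE  # anyone can walk away at any point
    return TRANSITIONS.get((state, event), state)
```

Keeping the transitions in one table makes the consent step explicit: the only path into `SEARCHING` goes through a `thumbs_up` event.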

The application combines several AI and robotics technologies:

Robot Control (reachy-mini SDK)

```python
from reachy_mini import ReachyMini
from reachy_mini.utils import create_head_pose
import numpy as np

mini = ReachyMini()
mini.enable_motors()

# Look forward and wiggle antennas
mini.goto_target(
    head=create_head_pose(pitch=-5),
    duration=0.5
)

for _ in range(3):
    mini.goto_target(antennas=np.deg2rad([35, -35]), duration=0.12)
    mini.goto_target(antennas=np.deg2rad([-35, 35]), duration=0.12)
```

Vision Processing (MediaPipe)
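The thumbs-up check can be expressed as a simple geometric test on hand landmarks. This is a sketch under assumptions, not the application's code: it takes 21 `(x, y)` pairs in the MediaPipe Hands landmark order, and the three-part heuristic (thumb pointing up, other fingers curled, thumb tip highest) is one common approach that would need tuning on real footage.

```python
import math

# MediaPipe Hands landmark indices (21 points per hand)
WRIST = 0
THUMB_MCP, THUMB_IP, THUMB_TIP = 2, 3, 4
FINGER_PIPS = (6, 10, 14, 18)  # index, middle, ring, pinky PIP joints
FINGER_TIPS = (8, 12, 16, 20)  # matching fingertips


def is_thumbs_up(landmarks):
    """landmarks: 21 (x, y) pairs in image coordinates (y grows downward)."""
    if len(landmarks) != 21:
        return False

    def dist(a, b):
        return math.dist(landmarks[a], landmarks[b])

    # Thumb extended upward: tip above the IP joint, IP joint above the MCP joint
    thumb_up = (landmarks[THUMB_TIP][1] < landmarks[THUMB_IP][1]
                < landmarks[THUMB_MCP][1])
    # Other fingers curled: each tip is closer to the wrist than its PIP joint
    curled = all(dist(tip, WRIST) < dist(pip, WRIST)
                 for tip, pip in zip(FINGER_TIPS, FINGER_PIPS))
    # Thumb tip is the highest point of the whole hand
    highest = all(landmarks[THUMB_TIP][1] < landmarks[i][1]
                  for i in range(21) if i != THUMB_TIP)
    return thumb_up and curled and highest
```

With the legacy MediaPipe Hands solution, the pairs would come from each detected landmark's `x` and `y` fields. Requiring the gesture to hold for several consecutive frames before treating it as consent would reduce false positives.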

Badge Reading (Claude Vision)

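```python
import anthropic

client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment

# image_b64 and BADGE_PROMPT are defined elsewhere in the application
message = client.messages.create(
    model="claude-3-5-sonnet-20241022",
    max_tokens=256,  # required by the Messages API; 256 is an assumed budget
    messages=[{
        "role": "user",
        "content": [
            # media_type is required for base64 images; JPEG is assumed here
            {"type": "image", "source": {"type": "base64", "media_type": "image/jpeg", "data": image_b64}},
            {"type": "text", "text": BADGE_PROMPT}
        ]
    }]
)
```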

LinkedIn Search (Google Custom Search API)
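A search limited to LinkedIn profile pages could be sketched as follows. The endpoint and the `key`, `cx`, `q`, and `num` parameters belong to Google's Custom Search JSON API. The helper names, the query wording, and the choice of five results are assumptions for illustration.

```python
from urllib.parse import urlencode, urlparse

SEARCH_ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def build_search_url(name, company, api_key, engine_id):
    """Build a Custom Search request limited to LinkedIn profile pages."""
    query = f'site:linkedin.com/in "{name}" {company}'.strip()
    params = {"key": api_key, "cx": engine_id, "q": query, "num": 5}
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


def first_profile_link(response_json):
    """Return the first result whose URL looks like linkedin.com/in/<slug>."""
    for item in response_json.get("items", []):
        parsed = urlparse(item.get("link", ""))
        host = parsed.netloc.lower()
        on_linkedin = host == "linkedin.com" or host.endswith(".linkedin.com")
        if on_linkedin and parsed.path.startswith("/in/"):
            return item["link"]
    return None  # caller falls back to the friendly shrug
```

Filtering the results a second time on the client side guards against company pages or posts slipping through when the search engine configuration is broader than expected.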

Only results matching linkedin.com/in/* are treated as profile hits.

Display UI (FastAPI + WebSockets)
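On the display side, the core job is pushing booth events to every connected screen. The sketch below is framework-agnostic and hypothetical: `DisplayHub` and the event names are illustrative, and it only assumes each client object offers an async `send_json` method, which FastAPI's `WebSocket` does.

```python
import asyncio


class DisplayHub:
    """Tracks connected display clients and pushes booth events to them."""

    def __init__(self):
        self.clients = set()

    def add(self, ws):
        self.clients.add(ws)

    def remove(self, ws):
        self.clients.discard(ws)

    async def broadcast(self, event, **payload):
        """Send one JSON message to every client, dropping failed connections."""
        message = {"event": event, **payload}
        clients = list(self.clients)
        results = await asyncio.gather(
            *(ws.send_json(message) for ws in clients),
            return_exceptions=True,
        )
        for ws, result in zip(clients, results):
            if isinstance(result, Exception):
                self.remove(ws)  # client went away; stop sending to it
        return message
```

In a FastAPI app, a WebSocket endpoint would accept the connection, call `hub.add(websocket)`, and call `hub.remove(websocket)` when the client disconnects. The booth logic then only needs something like `await hub.broadcast("greeting", name="Ada")`.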

One of the most enjoyable parts was programming the robot's personality. The SDK makes it straightforward to create expressive animations:

```python
async def celebration(self):
    """Excited reaction when LinkedIn profile found."""
    # Quick happy nods
    for _ in range(2):
        self.mini.goto_target(head=create_head_pose(pitch=15), duration=0.18)
        await asyncio.sleep(0.18)
        self.mini.goto_target(head=create_head_pose(pitch=-8), duration=0.18)
        await asyncio.sleep(0.18)

    # Antenna dance party
    for _ in range(4):
        self.mini.goto_target(antennas=np.deg2rad([50, -50]), duration=0.1)
        await asyncio.sleep(0.1)
        self.mini.goto_target(antennas=np.deg2rad([-50, 50]), duration=0.1)
        await asyncio.sleep(0.1)
```

There's also a "thinking" animation when searching (head tilt with slow antenna waves), and a friendly shrug when the profile isn't found. These small touches make a huge difference in how people interact with the robot.
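The slow antenna wave of the "thinking" animation can be generated as keyframes rather than written out by hand. This is a sketch only: `thinking_keyframes` and its default values are assumptions, and the head tilt is left out because the snippets in this post only show the `pitch` argument of `create_head_pose`.

```python
import math


def thinking_keyframes(cycles=2, steps_per_cycle=8, amplitude_deg=20.0,
                       step_duration=0.25):
    """Return (left_rad, right_rad, duration) tuples for a slow antenna wave."""
    frames = []
    for i in range(cycles * steps_per_cycle):
        phase = 2 * math.pi * i / steps_per_cycle
        angle = math.radians(amplitude_deg) * math.sin(phase)
        # Antennas mirror each other, like the wiggle in the earlier examples
        frames.append((angle, -angle, step_duration))
    return frames
```

Each frame would then be played the same way as in the celebration: pass the two angles as `antennas` to `goto_target` with the frame's `duration`, and sleep for that duration before sending the next one.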

Pollen Robotics has made both the hardware CAD files and the software fully open source. This matters because the goal is to make robotics as accessible as AI software development, removing the "closed-system" bottleneck.

The reachy_mini repository includes everything from the Python SDK to example applications and even integration with Hugging Face Spaces for app discovery.

The current implementation uses Claude's Vision API for badge reading—it works well and handles the OCR task reliably. But running everything through cloud APIs has drawbacks: latency, costs, and dependency on external services.

For the next iteration, I want to experiment with local LLMs using NVIDIA DGX Spark.

The goal would be a fully self-contained system: no cloud dependencies, faster response times, and the ability to run anywhere without internet connectivity.

There's something quite satisfying about robotics in combination with AI. It doesn't just process data but creates physical presence and personality.

Reachy Mini hits a sweet spot: accessible enough for weekend projects, capable enough for "real applications", and open to learn from and build upon. If you're interested in robotics, AI, or just want to build something fun, I'd encourage you to check it out.

And if you see Reachy Mini at a conference booth, give it a thumbs up. It'll be happy to find your LinkedIn profile (Next at ContainerDays London).

How I got a 230B parameter open model running on desktop hardware using NVIDIA D…

A quick how-to guide for connecting Claude Code to your local MiniMax M2.5 infer…

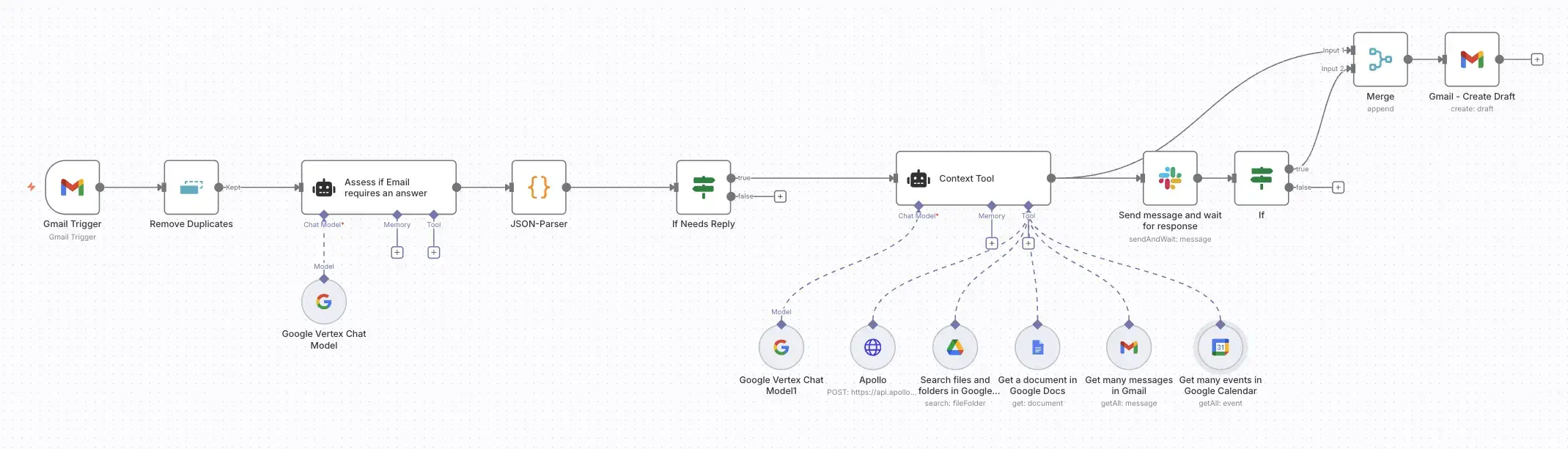

Build an AI-powered email assistant that gathers context from your calendar, doc…

Get a shared vocabulary of proven Transformation Patterns, common Anti-Patterns, and Paradigm Patterns to have more effective, data-driven conversations about your strategy and architecture.

For a personalized starting point, take our free online assessment. Your results will give you a detailed report on your current maturity and suggest the most relevant patterns to focus on first.

Every Tuesday, we deliver one short, powerful read on AI Native to help you lead better, adapt faster, and build smarter—based on decades of experience helping teams transform for real.