The Evolving Landscape of Large Language Model (LLM) Architectures

Discover the evolving landscape of Large Language Model (LLM) architectures and…

Large Language Models (LLMs) have transformed the field of natural language processing (NLP), powering applications from chatbots and virtual assistants to machine translation and content generation. Models like OpenAI's GPT-4, Anthropic's Claude 3.5 Sonnet, and Google's Gemini 1.5 have demonstrated remarkable results in generating human-like text, performing complex reasoning, and even passing professional exams. But what exactly are LLMs, and what key concepts underpin how they work?

In this blog post, we'll explore what Large Language Models are and explain essential terminologies associated with them.

Large Language Models are advanced neural networks trained on huge amounts of textual data to understand, generate, and manipulate human language. They are characterized by their large number of parameters—often billions or even trillions—which enable them to capture complex patterns, syntax, and semantics in language.

LLMs are trained to predict the next word (more precisely, the next token) in a sequence. This simple objective gives rise to broad capabilities in language understanding and generation. Through extensive training, these models learn the probability distribution of word sequences, allowing them to generate coherent and contextually relevant text.

Key Characteristics of LLMs:

At the heart of LLMs lies a fundamental task: predicting the next word in a sequence given the preceding words. This seemingly simple task enables the model to learn the statistical properties of language, capturing both short-term and long-term dependencies.

Emergent Abilities:

As LLMs scale in model size and training data, they begin to show capabilities in areas they were never explicitly trained for.

These abilities emerge because the models learn complex patterns and relationships in the data, enabling them to generalize knowledge across different tasks.

Understanding the following key concepts is essential to grasp how LLMs function.

Language modeling involves learning the probability distribution over sequences of words. By predicting the likelihood of a word given the preceding words, LLMs can generate coherent text.

Example:
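A minimal sketch of the idea, assuming a toy three-sentence corpus and a count-based bigram model in which each word is conditioned only on the word before it. Real LLMs use neural networks that condition on the entire preceding context, but the probability bookkeeping is the same: the probability of a sentence is the product of its next-word probabilities. The `<s>`/`</s>` boundary markers and all function names here are illustrative choices, and smoothing for unseen word pairs is deliberately omitted.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Estimate P(next | prev) = count(prev, next) / count(prev, *) from whitespace-split sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return {
        prev: {word: c / sum(nexts.values()) for word, c in nexts.items()}
        for prev, nexts in counts.items()
    }

def sentence_probability(model, sentence):
    """Chain rule under the bigram assumption: multiply P(w_i | w_{i-1}) across the sentence."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        prob *= model.get(prev, {}).get(nxt, 0.0)  # unseen transitions get probability 0
    return prob

def predict_next(model, prev):
    """Return the most probable word to follow `prev`."""
    return max(model[prev], key=model[prev].get)

model = train_bigram(["the cat sat", "the cat ran", "the dog sat"])
print(predict_next(model, "the"))  # cat
print(sentence_probability(model, "the cat sat"))
```

Here P("cat" | "the") is 2/3 because "the" is followed by "cat" in two of the three sentences, so "the cat sat" scores 1 × 2/3 × 1/2 × 1 = 1/3.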

Tokenization is the process of breaking text down into smaller units called tokens. Depending on the scheme, tokens can be sentences, words, subwords, or characters. Effective tokenization is crucial for model performance.

Consider the following sentence:

She exclaimed, "I'll never forget the well-known author's life's work!"

1. Whitespace Tokenization:

["She", "exclaimed,", "\"I'll", "never", "forget", "the", "well-known", "author's", "life's", "work!\""]

2. Punctuation-Based Tokenization:

["She", "exclaimed", ",", "\"", "I", "'ll", "never", "forget", "the", "well", "-", "known", "author", "'s", "life", "'s", "work", "!", "\""]

3. Subword Tokenization (Byte-Pair Encoding):

["She", "ex", "claim", "ed", ",", "\"", "I", "'ll", "never", "for", "get", "the", "well", "-", "known", "author", "'s", "life", "'s", "work", "!", "\""]

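Explanation: Words are broken into smaller subword units. This helps with rare or complex words, which can be decomposed into familiar components. The splits shown here are illustrative; a trained BPE tokenizer's actual output depends on the merge rules it learned from its training data.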
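The first two schemes can be reproduced with Python's standard library. Whitespace tokenization is just `str.split()`. For punctuation-based tokenization, the regular expression below is one possible choice (an assumption, not a standard rule set): it matches a contraction suffix such as `'ll` or `'s`, a run of word characters, or a single punctuation character. For this sentence it yields exactly the list in scheme 2.

```python
import re

SENTENCE = 'She exclaimed, "I\'ll never forget the well-known author\'s life\'s work!"'

def whitespace_tokenize(text):
    """Scheme 1: split on runs of whitespace; punctuation stays attached to words."""
    return text.split()

# Scheme 2: try a contraction suffix first ('ll, 's), then a run of word
# characters, then any single character that is neither a word char nor space.
PUNCT_PATTERN = re.compile(r"'\w+|\w+|[^\w\s]")

def punctuation_tokenize(text):
    """Scheme 2: separate punctuation and contraction suffixes from words."""
    return PUNCT_PATTERN.findall(text)

print(whitespace_tokenize(SENTENCE))
print(punctuation_tokenize(SENTENCE))
```

Note how the whitespace scheme leaves tokens like `"I'll` and `work!"` with punctuation glued on, which inflates the vocabulary with many near-duplicate entries.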

Benefits of Subword Tokenization:
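One such benefit, handling words that never appeared in the tokenizer's training data, shows up even in a toy version of the BPE training loop: count adjacent symbol pairs across a corpus, merge the most frequent pair into a new symbol, and repeat. This sketch assumes a tiny hand-made corpus and plain characters as the starting symbols; production BPE tokenizers add details such as byte-level alphabets and special handling of spaces that are omitted here. The function names are hypothetical, and when two pairs are equally frequent this sketch simply keeps the one counted first.

```python
from collections import Counter

def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)

def learn_bpe_merges(words, num_merges):
    """Learn up to `num_merges` merge rules from a list of words (toy BPE training)."""
    # Start from single characters; weight each distinct word by its frequency.
    vocab = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break  # every word is already a single symbol
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges

def bpe_tokenize(word, merges):
    """Split a word into subwords by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)

corpus = ["low"] * 5 + ["lowest"] * 2 + ["newest"] * 3
merges = learn_bpe_merges(corpus, num_merges=4)
print(bpe_tokenize("slowest", merges))  # ['s', 'low', 'est']
```

Although "slowest" never occurs in the corpus, it is split into pieces the tokenizer has already learned ("s", "low", "est") rather than being treated as a single unknown word.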

Embeddings convert tokens into numerical vectors that capture semantic meaning. Similar words have embeddings that are close in the vector space.
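To make "close in the vector space" concrete, the sketch below looks tokens up in a tiny embedding table and compares them with cosine similarity, a common closeness measure for embeddings. The vocabulary and 3-dimensional vectors are hand-picked for illustration only (real embeddings are learned during training and usually have hundreds or thousands of dimensions), and all names are hypothetical.

```python
import math

# Hypothetical toy vocabulary: each token maps to a row index in the table.
VOCAB = {"king": 0, "queen": 1, "apple": 2}
# Hand-picked vectors, NOT learned values: related words point in similar directions.
EMBEDDING_TABLE = [
    [0.80, 0.65, 0.10],  # king
    [0.78, 0.70, 0.12],  # queen
    [0.05, 0.10, 0.90],  # apple
]

def embed(tokens):
    """Look up each token's row in the embedding table."""
    return [EMBEDDING_TABLE[VOCAB[t]] for t in tokens]

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

king, queen, apple = embed(["king", "queen", "apple"])
print(round(cosine_similarity(king, queen), 3))
print(round(cosine_similarity(king, apple), 3))
```

With these vectors "king" and "queen" score close to 1, while "king" and "apple" score far lower.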

LLMs generate text autoregressively, predicting one token at a time based on the tokens that came before. At each step they output a probability distribution over possible next tokens and select one according to a decoding strategy (e.g., greedy search, beam search, or sampling).
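The loop below is a minimal sketch of autoregressive decoding with two of the strategies mentioned above, greedy search and temperature-scaled sampling; beam search is omitted for brevity. `toy_logits` is a hypothetical stand-in for a real model: it scores a four-token vocabulary and strongly favors the token that follows the last one.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_pick(probs):
    """Greedy search: always take the single most probable token."""
    return max(range(len(probs)), key=probs.__getitem__)

def sample_pick(probs, rng):
    """Sampling: draw a token at random, weighted by its probability."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def generate(next_token_logits, prompt, max_new_tokens, strategy="greedy",
             temperature=1.0, seed=0):
    """Append one token at a time, each conditioned on everything generated so far."""
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = softmax(next_token_logits(tokens), temperature)
        if strategy == "greedy":
            next_id = greedy_pick(probs)
        elif strategy == "sample":
            next_id = sample_pick(probs, rng)
        else:
            raise ValueError(f"unknown strategy: {strategy}")
        tokens.append(next_id)
    return tokens

def toy_logits(tokens, vocab_size=4):
    """Hypothetical stand-in model: strongly prefers the token after the last one."""
    logits = [0.0] * vocab_size
    logits[(tokens[-1] + 1) % vocab_size] = 2.0
    return logits

print(generate(toy_logits, [0], max_new_tokens=3))  # [0, 1, 2, 3]
print(generate(toy_logits, [0], max_new_tokens=5, strategy="sample", temperature=1.5, seed=42))
```

Greedy decoding is deterministic, while sampling with a higher temperature flattens the distribution and yields more varied continuations.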

Context size refers to the maximum number of tokens the model can process in a single input. Larger context sizes allow the model to consider more preceding text, leading to more coherent and contextually appropriate outputs.
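When an input exceeds the context size, something has to be dropped. One simple approach, sketched here as an assumption rather than the behavior of any particular model, is to keep only the most recent tokens, optionally reserving room for the tokens the model will generate. `fit_to_context` and `reserve_for_output` are hypothetical names.

```python
def fit_to_context(token_ids, context_size, reserve_for_output=0):
    """Keep only the most recent tokens that fit in the window, leaving room for output."""
    budget = context_size - reserve_for_output
    if budget <= 0:
        raise ValueError("reserve_for_output must be smaller than context_size")
    return token_ids[-budget:]  # slicing returns the whole list if it already fits

history = list(range(10))  # stand-in token IDs 0..9
print(fit_to_context(history, context_size=8, reserve_for_output=3))  # [5, 6, 7, 8, 9]
```

Anything truncated this way is invisible to the model, which is why a larger context size lets it take more of the preceding text into account.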

Scaling laws describe how model size, dataset size, and compute affect performance. Research (e.g., the Chinchilla paper) has shown that, for a fixed compute budget, there is an optimal balance between model size and the amount of training data.

Key Points:

Optimal Training: The Chinchilla authors trained hundreds of models and reported a compute-optimal token-to-parameter ratio (TPR) of roughly 20: a model with \(N\) parameters should be trained on approximately \(20N\) tokens.
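The rule of thumb is simple arithmetic. The helper below (a hypothetical name) applies the approximate ratio of 20 from the text; for 70 billion parameters it gives 1.4 trillion tokens, which matches the configuration of the Chinchilla model itself. Treat the result as a rough guide, since the ratio is an approximation drawn from fitted scaling curves rather than an exact law.

```python
TOKENS_PER_PARAMETER = 20  # approximate Chinchilla token-to-parameter ratio

def compute_optimal_tokens(num_parameters, tokens_per_parameter=TOKENS_PER_PARAMETER):
    """Rule-of-thumb training-set size, in tokens, for a model with N parameters."""
    return num_parameters * tokens_per_parameter

for n in (1_000_000_000, 7_000_000_000, 70_000_000_000):
    print(f"{n / 1e9:.0f}B parameters -> ~{compute_optimal_tokens(n) / 1e12:.2f}T tokens")
```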

Emergent abilities are skills that appear in LLMs as they scale, without the models being explicitly trained for them.

These abilities highlight the potential of LLMs to generalize knowledge and perform complex tasks.

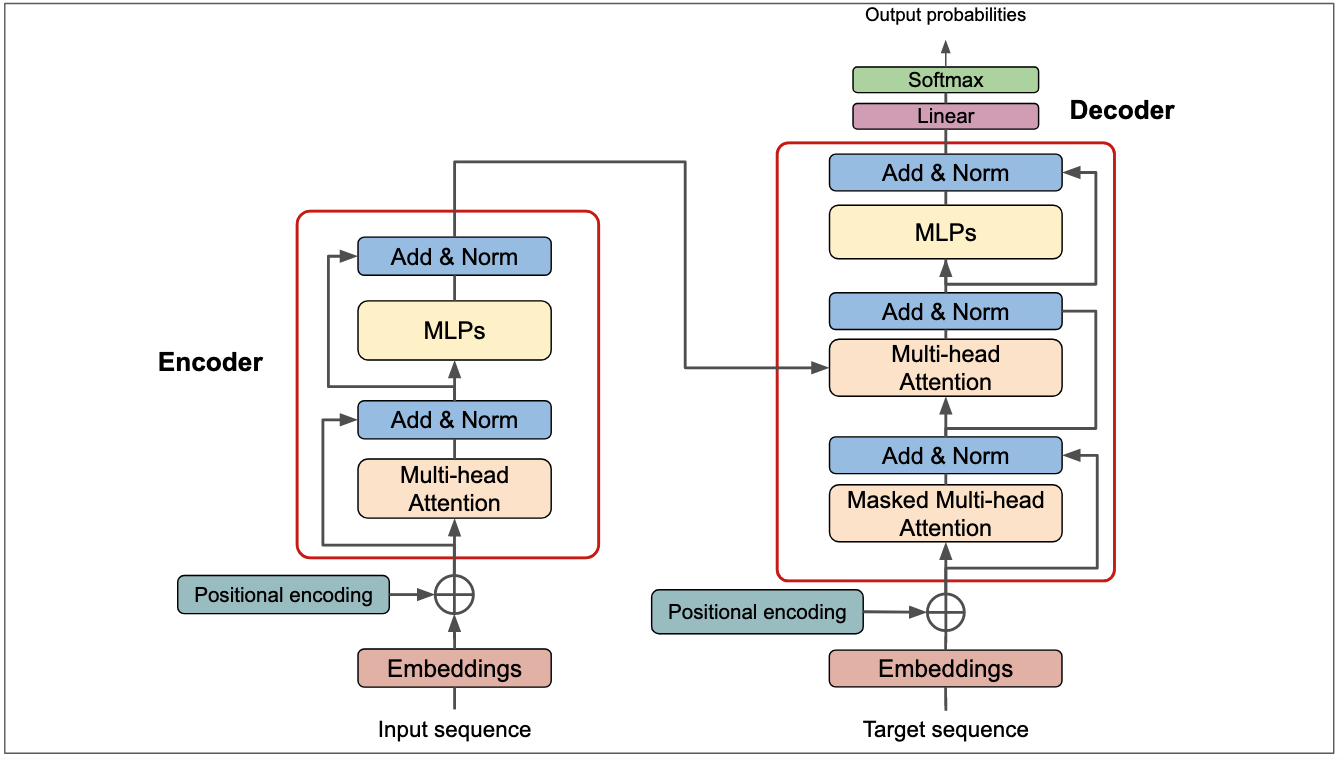

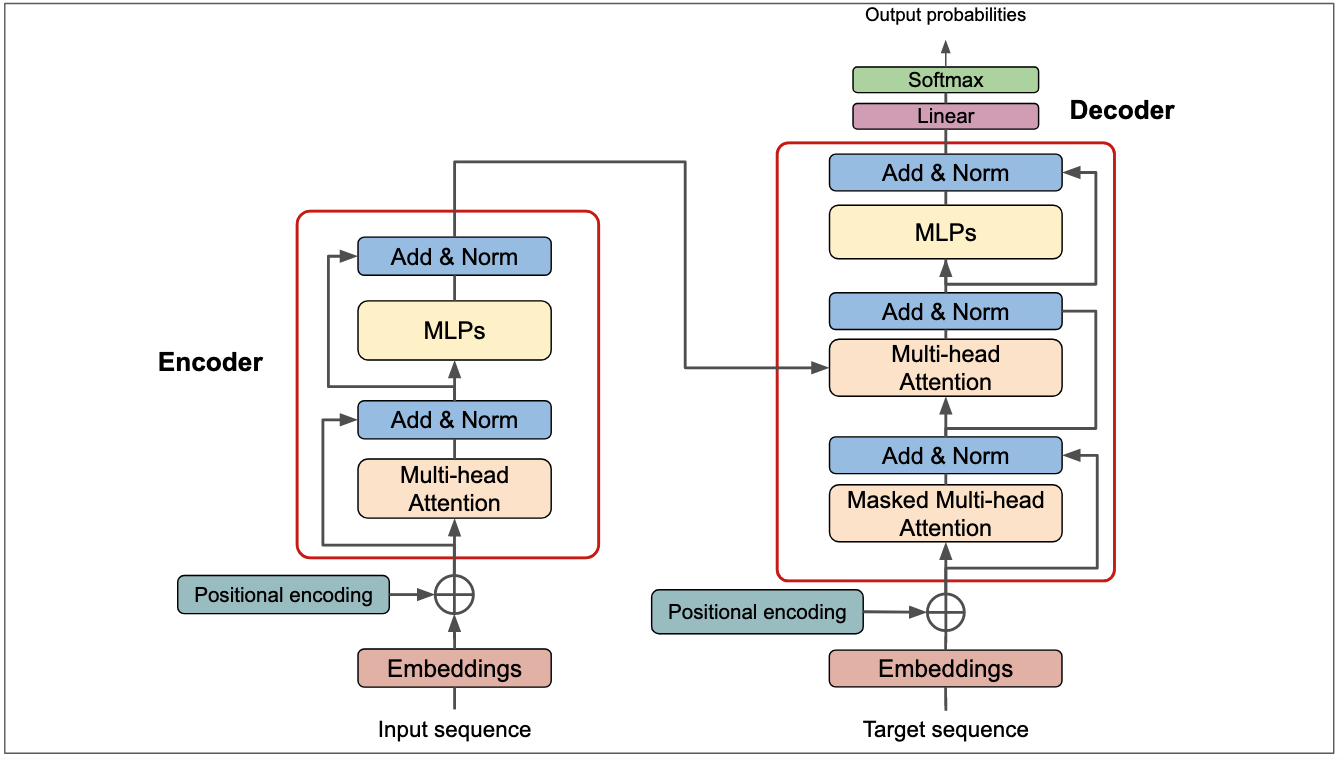

Large Language Models represent a significant advancement in AI and NLP, with strong capabilities in understanding and generating human language. By leveraging architectures like transformers and concepts like tokenization and embeddings, LLMs can perform a wide array of tasks, often without task-specific training.

Understanding the key terminologies and concepts behind LLMs is essential for anyone interested in AI, as these models continue to influence technology. As research progresses, we can expect LLMs to become even more capable, opening up new possibilities and challenges in the field of artificial intelligence.
