The Evolving Landscape of Large Language Model (LLM) Architectures

Discover the evolving landscape of Large Language Model (LLM) architectures and…

This tutorial guides you through deploying Llama and DeepSeek models with SGLang, a library for efficient LLM serving, and integrating them with LibreChat as a chat interface. We'll deploy two popular models: deepseek-ai/deepseek-llm-7b-chat and meta-llama/Llama-3.1-8B-Instruct. The process is adaptable to any model supported by SGLang. For detailed model information, refer to the supported models section.

Before starting, you'll need:

SGLang seamlessly fetches model weights directly from the Hugging Face Model Hub. This tutorial uses:

deepseek-ai/deepseek-llm-7b-chat

meta-llama/Llama-3.1-8B-Instruct

Important:

Log in to DataCrunch: Access your DataCrunch cloud dashboard.

Navigate to your Project: Open an existing project or create a new one.

Create New Deployment:

Give the deployment a name (e.g., deepseek-sglang).

Container Image Configuration:

docker.io/lmsysorg/sglang:v0.2.13-cu124 (or choose your preferred version from SGLang Docker Hub).

Port Configuration:

Exposed port: 30000

Health check port: 30000

Health check path: /health

Start Command Configuration:

python3 -m sglang.launch_server --model-path deepseek-ai/deepseek-llm-7b-chat --host 0.0.0.0 --port 30000

python3 -m sglang.launch_server: Executes the SGLang server script.

--model-path deepseek-ai/deepseek-llm-7b-chat: Specifies the Hugging Face model to load. To deploy Llama 3.1, change this to meta-llama/Llama-3.1-8B-Instruct.

--host 0.0.0.0: Makes the server accessible from outside the container.

--port 30000: Sets the server port to 30000, matching the exposed port.

Environment Variables:

Add your Hugging Face access token as HF_TOKEN.

Deploy! Click "Deploy container".

DataCrunch will now pull the SGLang image, download the model weights from Hugging Face, and start the SGLang container. This process can take several minutes depending on the model size, so please be patient.
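Because startup can take a while, it may help to poll the health check path until the server answers. The following is a minimal sketch using only the Python standard library. It assumes that the /health path configured above is reachable through your deployment's public URL and that it accepts the same Bearer token as the other endpoints; the function name, the timeout values, and the injectable `opener` parameter are illustrative choices, not part of DataCrunch or SGLang.

```python
import time
import urllib.request


def wait_until_healthy(base_url, api_key, timeout_s=900.0, interval_s=10.0,
                       opener=urllib.request.urlopen, sleep=time.sleep):
    """Poll <base_url>/health until it returns HTTP 200 or the timeout expires.

    Returns True once the server reports healthy, False on timeout.
    `opener` and `sleep` are injectable so the loop can be exercised offline.
    """
    url = base_url.rstrip("/") + "/health"
    request = urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {api_key}"},  # assumption: same key as inference
        method="GET",
    )
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with opener(request, timeout=10) as response:
                if response.status == 200:
                    return True
        except OSError:
            # URLError and HTTPError are OSError subclasses: the container is
            # probably still pulling the image or downloading weights.
            pass
        if time.monotonic() >= deadline:
            return False
        sleep(interval_s)


# Example call (placeholders must be replaced with your own values):
# wait_until_healthy("https://<YOUR_CONTAINERS_API_URL>", "<YOUR_INFERENCE_API_KEY>")
```

A generous timeout is used by default because the first start includes the model download.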

Monitoring Deployment:

Once the deployment is running, its endpoint URL has the form https://containers.datacrunch.io/<YOUR-DEPLOYMENT-NAME>/.

Testing the Deployment with curl:

Verify your deployment is working using a get_model_info request. This checks that the SGLang server is running and serving the correct model information.

Open your terminal and run the following curl command, replacing placeholders with your actual values:

curl -X GET https://<YOUR_CONTAINERS_API_URL>/get_model_info \
  --header 'Authorization: Bearer <YOUR_INFERENCE_API_KEY>' \
  --header 'Content-Type: application/json'

Expected Successful Response:

{
  "model_path": "deepseek-ai/deepseek-llm-7b-chat",
  "tokenizer_path": "deepseek-ai/deepseek-llm-7b-chat",
  "is_generation": true
}

If you see this response, your SGLang endpoint is deployed and working correctly!
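If you prefer to script this check, the same request can be sketched in Python with the standard library. The helper names below are hypothetical; the URL path, the Authorization header, and the response fields are the ones shown in the curl example and expected response above.

```python
import json
import urllib.request


def build_model_info_request(base_url, api_key):
    """Build the GET request for <base_url>/get_model_info (nothing is sent yet)."""
    url = base_url.rstrip("/") + "/get_model_info"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


def serves_expected_model(response_body, expected_model):
    """Return True if the JSON body names the expected generation model."""
    info = json.loads(response_body)
    return info.get("model_path") == expected_model and info.get("is_generation") is True


# Example call (placeholders must be replaced with your own values):
# request = build_model_info_request("https://<YOUR_CONTAINERS_API_URL>",
#                                    "<YOUR_INFERENCE_API_KEY>")
# with urllib.request.urlopen(request, timeout=30) as response:
#     print(serves_expected_model(response.read(), "deepseek-ai/deepseek-llm-7b-chat"))
```

Keeping request construction separate from response checking makes it possible to try the parsing logic against a saved response before touching the live endpoint.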

To deploy a different SGLang-compatible model, simply repeat steps 3-8 in the "Deployment Steps on DataCrunch" section, changing only the --model-path in the Start Command.

For example, to deploy Llama 3, use:

python3 -m sglang.launch_server --model-path meta-llama/Llama-3.1-8B-Instruct --host 0.0.0.0 --port 30000
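Since only --model-path changes between deployments, the start command can be generated rather than retyped. This is a small sketch; `build_launch_command` is a hypothetical helper, and it uses only the flags already shown in this tutorial.

```python
import shlex


def build_launch_command(model_path, host="0.0.0.0", port=30000):
    """Return the SGLang start command for a given Hugging Face model path."""
    return shlex.join([
        "python3", "-m", "sglang.launch_server",
        "--model-path", model_path,
        "--host", host,
        "--port", str(port),
    ])


# Print the start command for each model used in this tutorial.
for model in ("deepseek-ai/deepseek-llm-7b-chat", "meta-llama/Llama-3.1-8B-Instruct"):
    print(build_launch_command(model))
```

The printed lines can be pasted into the Start Command field of the deployment.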

Let's connect LibreChat, a user-friendly chat interface. We'll use a local Podman setup for this example. Docker Compose (or Podman Compose) is the easiest way to run LibreChat.

Prerequisites:

Installation Steps:

git clone https://github.com/danny-avila/LibreChat.git

cd LibreChat

cp .env.example .env

cp librechat.example.yaml librechat.yaml

Configure librechat.yaml for DataCrunch SGLang Endpoint:

Open librechat.yaml and add the following custom section. Crucially, ensure baseURL is your DataCrunch endpoint's base URL followed by /v1/chat/completions:

custom:
  - name: "DeepSeek" # Name as it appears in LibreChat
    apiKey: "<YOUR_INFERENCE_API_KEY>" # Your DataCrunch Inference API Key
    baseURL: "https://<YOUR_CONTAINERS_API_URL>/v1/chat/completions" # baseURL for SGLang
    models:
      default: [
        "deepseek-llm-7b-chat", # Model name as used in SGLang/Hugging Face
      ]
      fetch: false
    titleConvo: true
    titleModel: "current_model"
    summarize: false
    summaryModel: "current_model"
    forcePrompt: false
    modelDisplayLabel: "DeepSeek on DataCrunch" # Display label in LibreChat
  - name: "Llama 3" # Another custom model configuration
    apiKey: "<YOUR_INFERENCE_API_KEY>"
    baseURL: "https://<YOUR_CONTAINERS_API_URL>/v1/chat/completions" # baseURL for SGLang
    models:
      default: [
        "Llama-3.1-8B-Instruct", # Model name as used in SGLang/Hugging Face
      ]
      fetch: false
    titleConvo: true
    titleModel: "current_model"
    summarize: false
    summaryModel: "current_model"
    forcePrompt: false
    modelDisplayLabel: "Llama 3 on DataCrunch" # Display label in LibreChat
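Before starting LibreChat, it can be useful to send one chat request straight to the endpoint, so that any problem with the URL or API key shows up outside the chat UI. The sketch below relies on SGLang's OpenAI-compatible /v1/chat/completions route and the standard OpenAI-style response shape. The helper names and the `max_tokens` value are illustrative, and the exact model name your server accepts is an assumption, so it is passed in as a parameter.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, user_message):
    """Build a POST request for <base_url>/v1/chat/completions (nothing is sent yet)."""
    url = base_url.rstrip("/") + "/v1/chat/completions"
    payload = {
        "model": model,  # assumption: use the name your SGLang server reports
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": 64,  # keep the smoke test short
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response_body):
    """Pull the assistant text out of an OpenAI-style chat completion response."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


# Example call (placeholders must be replaced with your own values):
# request = build_chat_request("https://<YOUR_CONTAINERS_API_URL>",
#                              "<YOUR_INFERENCE_API_KEY>",
#                              "deepseek-ai/deepseek-llm-7b-chat", "Say hello.")
# with urllib.request.urlopen(request, timeout=60) as response:
#     print(extract_reply(response.read()))
```

If this request succeeds but LibreChat still cannot connect, the problem is more likely in librechat.yaml or the compose setup than in the deployment itself.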

Modify api service volumes in docker-compose.yaml:

In the docker-compose.yaml, find the api service definition. Within the api service, locate the volumes section and extend it to include a bind mount for your librechat.yaml file. It should look like this (add the - type: bind section):

services:
  api:
    # ... other api service configurations ...
    volumes:
      # ... existing volumes ...
      - type: bind
        source: ./librechat.yaml # Path to your librechat.yaml file
        target: /app/librechat.yaml # Path inside the container where LibreChat expects it
      # ... more volumes ...

Remove extra_hosts for Podman (if using Podman):

If you are using Podman (especially on macOS or Windows), find the api service in your compose file and remove the extra_hosts section entirely. extra_hosts is Docker Desktop specific and not needed with Podman.

services:
  api:
    # ... other api service configurations ...
    # REMOVE THIS ENTIRE SECTION IF USING PODMAN:
    # extra_hosts:
    #   - 'host.docker.internal:host-gateway'
    # ... rest of api service configurations ...

Start LibreChat with Podman Compose:

podman compose up -d

🎉 Access LibreChat!

Open your web browser and go to http://localhost:3080/. You should now see the LibreChat interface. In the model selection dropdown, you will find "DeepSeek" and "Llama 3". Select one and start chatting!

Enjoy interacting with your deployed LLMs through LibreChat!

Troubleshooting Tips:

Check that the HF_TOKEN environment variable is correctly set and valid.

baseURL in librechat.yaml: Double-check that baseURL is https://<YOUR_CONTAINERS_API_URL>/v1/chat/completions.

… librechat.yaml.

Check the logs of the api container (using podman logs -f <api_container_name>) for connection errors.

By following these steps, you've deployed LLMs using SGLang on DataCrunch and connected them to LibreChat, creating a private AI chat environment!

Ready to Take the Next Step and Build Your Own LLM?

This tutorial showed you how to deploy pre-trained LLMs using SGLang and DataCrunch manually. But what if you want to go further and create a custom LLM tailored to your specific needs? DataCrunch provides the robust infrastructure and scalable compute resources necessary to train your own Large Language Models from scratch, or fine-tune existing models with your proprietary data.

If you're interested in exploring the possibilities of building and training your own LLMs, we're here to help! Reach out to our team today to discuss your project, explore available resources, and discover how DataCrunch can empower you to create cutting-edge AI solutions. Let us help you bring your unique LLM vision to life!

Discover the evolving landscape of Large Language Model (LLM) architectures and…

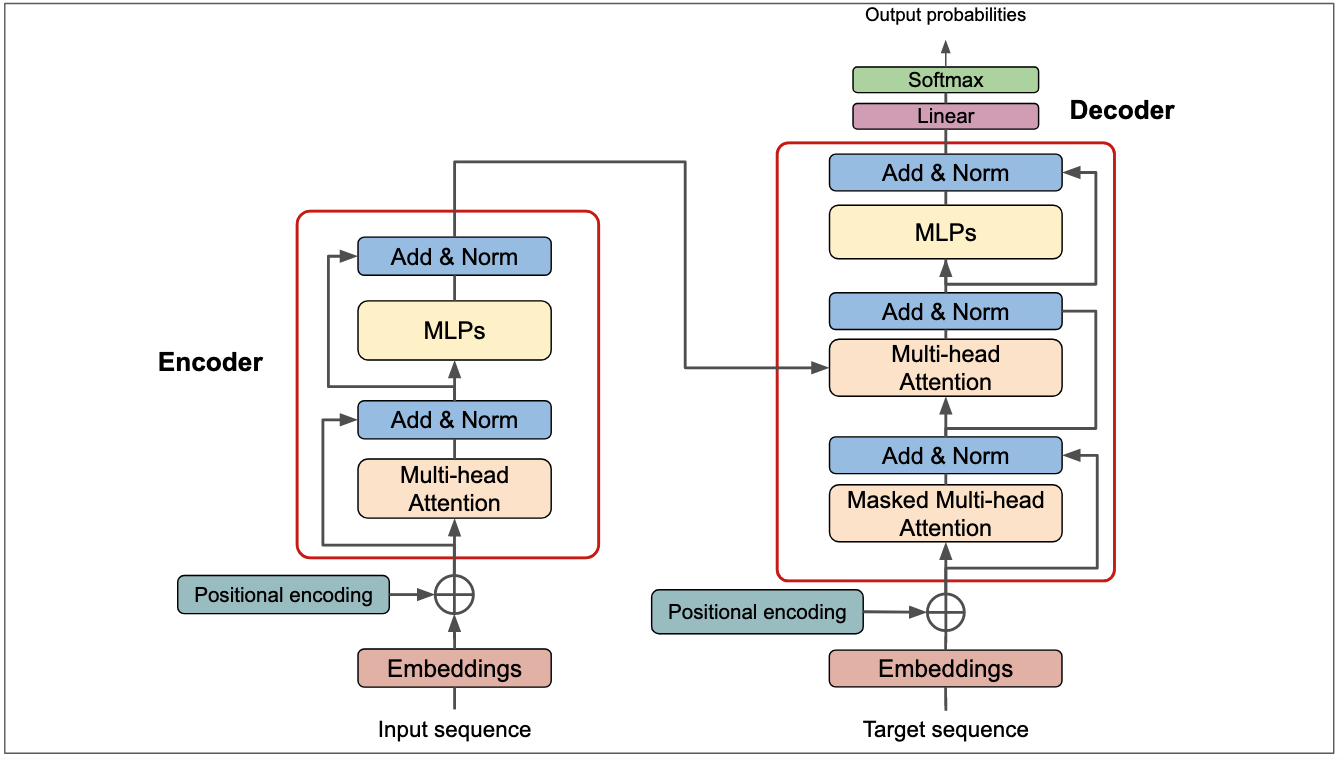

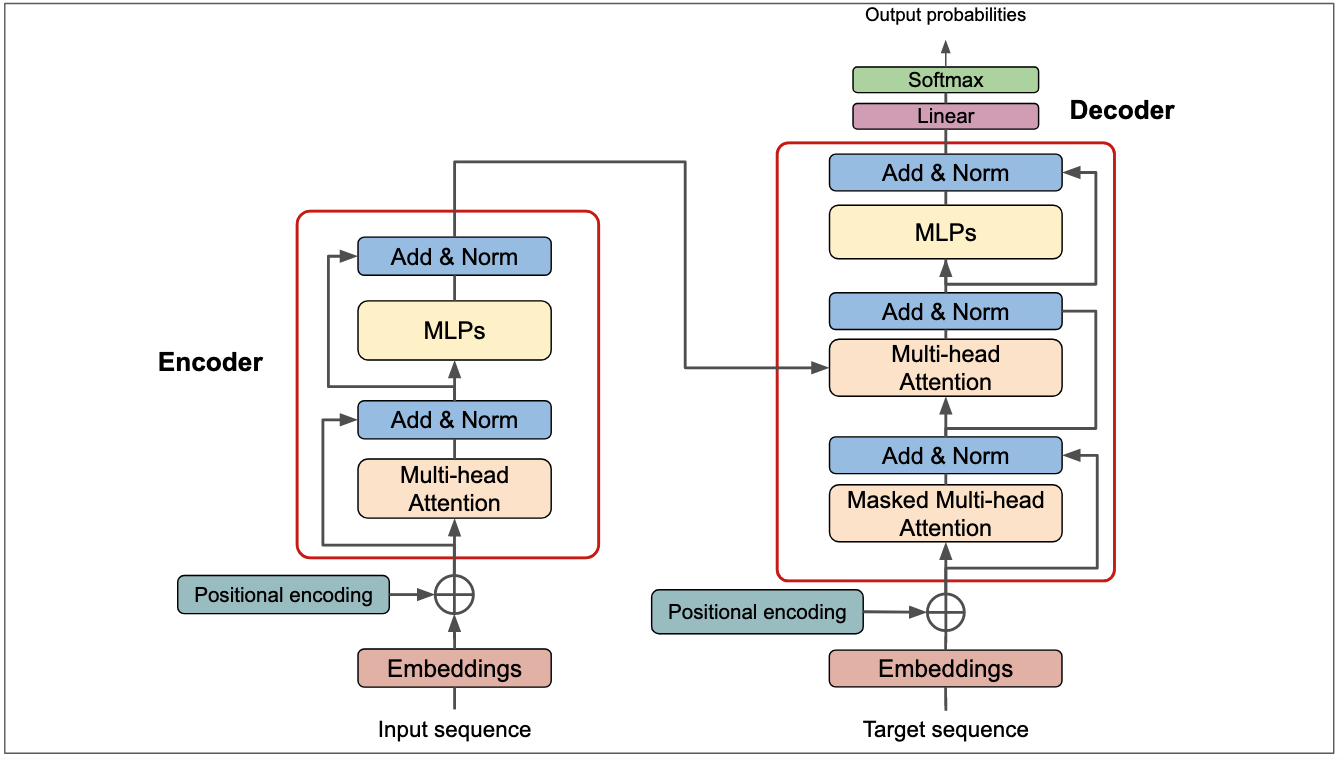

Learn about Large Language Models (LLMs), the Transformer architecture, tokeniza…

How I built a fun conference booth experience combining an open-source robot, vi…

Get a shared vocabulary of proven Transformation Patterns, common Anti-Patterns, and Paradigm Patterns to have more effective, data-driven conversations about your strategy and architecture.

For a personalized starting point, take our free online assessment. Your results will give you a detailed report on your current maturity and suggest the most relevant patterns to focus on first.

Every Tuesday, we deliver one short, powerful read on AI Native to help you lead better, adapt faster, and build smarter—based on decades of experience helping teams transform for real.