The Evolving Landscape of Large Language Model (LLM) Architectures

Discover the evolving landscape of Large Language Model (LLM) architectures and…

The Fortune headline landed like a punch to the gut of the market: "MIT report: 95% of generative AI pilots at companies are failing." The story spread like wildfire, fueling anxiety and affecting the stock prices of AI companies. Leaders, already under immense pressure to deliver on the promise of AI, are now questioning their investments or halting initiatives that were about to start.

If you are one of those leaders, acting on this headline alone would be a grave strategic error.

Let’s get this straight: the study is deeply flawed, the reporting is a huge misinterpretation, and the narrative it draws is dangerously misleading. The real story isn't about technology failure. It’s about organizational failure. And understanding that distinction is key to staying ahead of the coming AI Native wave.

Before you change your company’s AI strategy based on that headline, you should know what’s behind it. Or, more accurately, what isn’t.

Impossible to Find, Easy to Question

The biggest red flag is the report itself. It is difficult to access: it is hidden behind a Google Form, and even if you fill the form out, there is a good chance you won’t receive it. This isn't the behavior of a group confident in its findings; it looks more like research that can’t withstand open review. With a bit of good old Googling, though, the report can be found.

The report's conclusions are built on a methodology that appears fundamentally flawed. Its claims, which supposedly erased billions from AI market values, originate from a tiny and uncontextualized sample of just 52 executive interviews. We are given no information about who these people were or the nature of their organizations, leaving no way to verify whether they represent a meaningful cross-section of the industry. This is compounded by an odd benchmark for success: a project was only considered successful if it generated a public release or SEC filing about its impact. This unrealistic standard ignores the vast majority of valuable internal work that never receives a public announcement. The study's data also contains a surprising claim that half of all GenAI spending is in sales and marketing, a figure that suggests the sample was narrowly focused on these areas rather than representative of the broad, enterprise-wide adoption seen in credible analyses.

From an academic standpoint, this study lacks the necessary credibility and quality.

Ironically, the most valuable insight in the MIT report is the one the headlines completely ignored. Buried in the questionable data is a powerful confirmation of AI’s value: the rise of "shadow AI". Shadow AI is the AI equivalent of shadow IT: employees use unapproved tools, which risks data breaches, compliance violations, and intellectual property leaks.

While the report claims official company initiatives are stalling, it also found that 90% of employees are regularly using LLMs on their own. They are using their own tools to work, solving their own problems, and generating value completely outside of the formal, top-down pilot programs.

This is the real story. It’s not that AI is failing. It's that organizations are failing to use it. The technology's value is so self-evident that employees are adopting it en masse, even when their companies won't. The structures in place are too slow, too rigid, and too disconnected from the workforce to capture the value individuals are already creating.

The report’s own data on adoption blockers confirms this. The highest-rated barriers to scaling AI weren't technical. They were organizational: "unwillingness to adopt new tools" (9/10), "challenging change management" (6.5/10), and "lack of executive sponsorship" (6.5/10). The technology works; the organization doesn't.

The MIT report mistook the symptoms for the disease. Based on our work with customers navigating this transition, the challenges are clear, and they are overwhelmingly human, not technical. Organizational factors account for the majority of obstacles, while technological issues represent only a small share, a pattern common to any major change.

It is a familiar list of challenges that apply to any new technology adoption, just as we observed during hundreds of Cloud Native Transformations at various enterprises. Go through the list and make sure you have an answer for each point. This will make success much more likely.

Successfully integrating AI is a business transformation, not a technology project. To ensure successful scaling and lasting change with AI, focus on solving a specific, high-value business problem. This requires building a strong data foundation and investing significantly in employee upskilling. By taking this approach, initial pilot programs can serve as valuable learning experiences, building momentum for systemic transformation.

The panic surrounding the MIT report is a distraction. It’s a convenient excuse to blame the technology for what are, fundamentally, failures of leadership, strategy, and organizational design.

Some failure in experimentation is healthy. If 100% of your AI pilots are succeeding, you aren’t being ambitious enough. You aren't pushing the boundaries of what is possible as we move from simple copilots that assist individuals to autonomous AI agents that can redesign entire business systems.

But the systemic, 95% "failure" rate described is not a sign of healthy experimentation. It is a symptom of a deep disconnect between technological potential and organizational readiness. Navigating these organizational complexities is the single biggest determinant of success. If you're ready to move beyond the headlines and build a resilient AI strategy that delivers real value, let's talk about how to overcome these common adoption challenges.

Contact us to overcome the typical issues with technology adoption in enterprises.


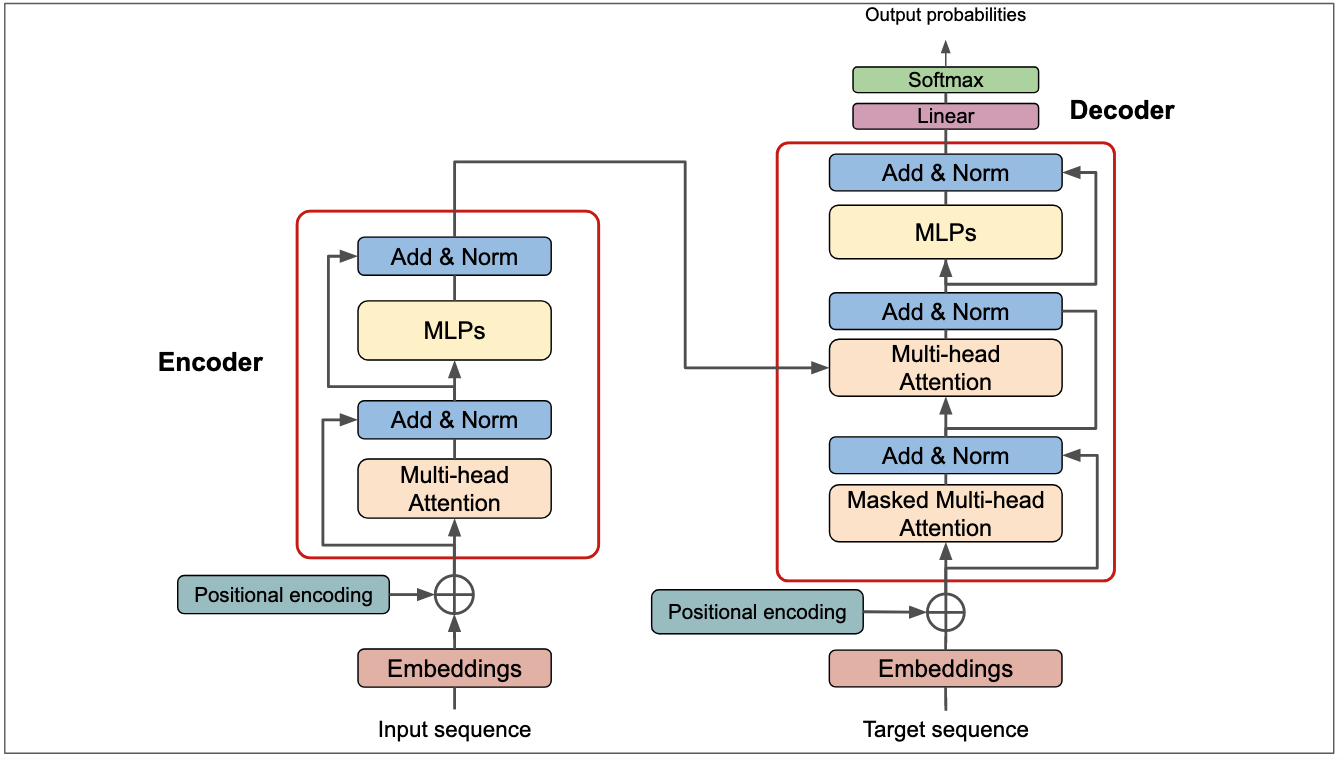

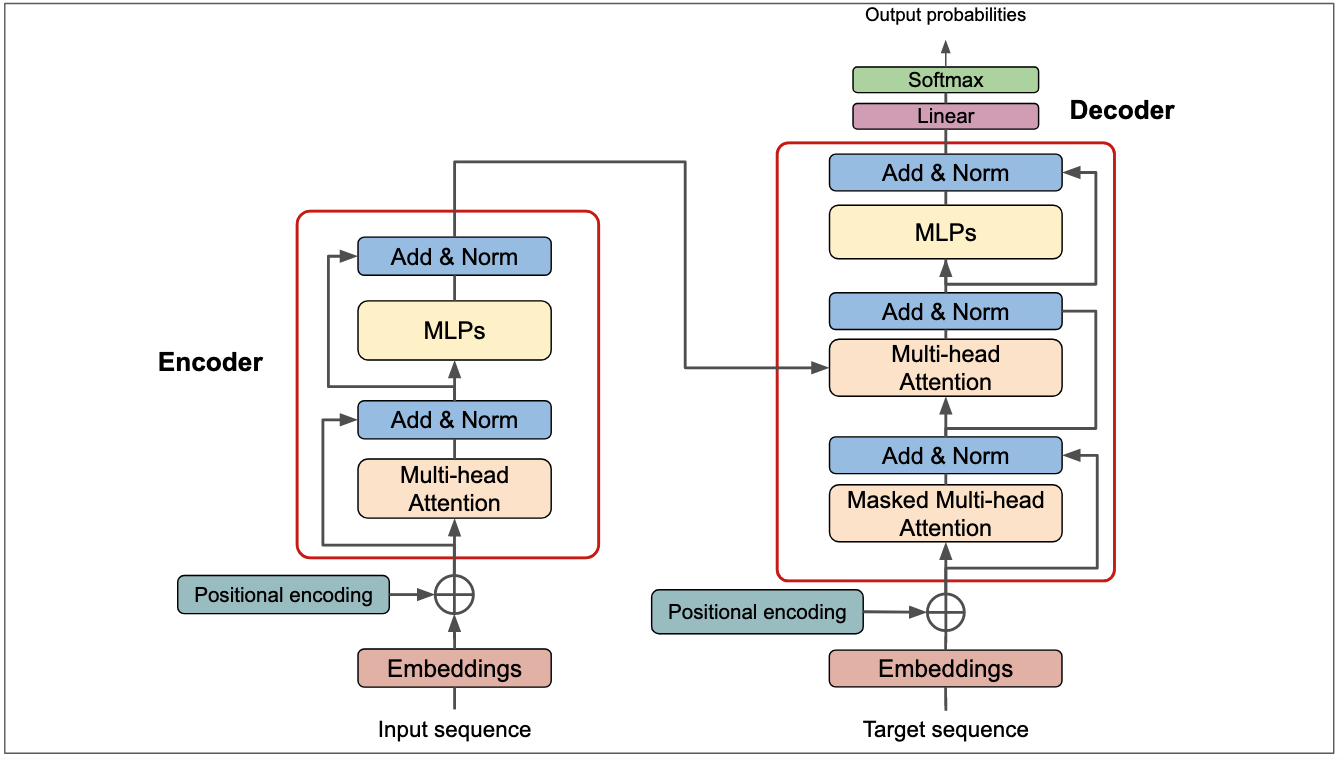

