Waves of Innovation

Patterns

This is the complete, interactive library of the transformation and paradigm patterns from our book, "Waves of Innovation." It's a practical, hands-on tool designed to give you and your team a shared vocabulary for navigating the complexities of the AI Native wave.

A Pattern Language for the AI Native Wave

Why a Pattern Language?

To navigate complex shifts, you need a shared vocabulary. A "pattern language," an idea from architect Christopher Alexander, is a collection of proven, reusable solutions to common challenges. As he argued, we can only consciously choose what we can name and discuss. These patterns are that language, helping you make better design choices and lead richer, more collaborative discussions with your team.

Three Ways to Put the Patterns into Practice

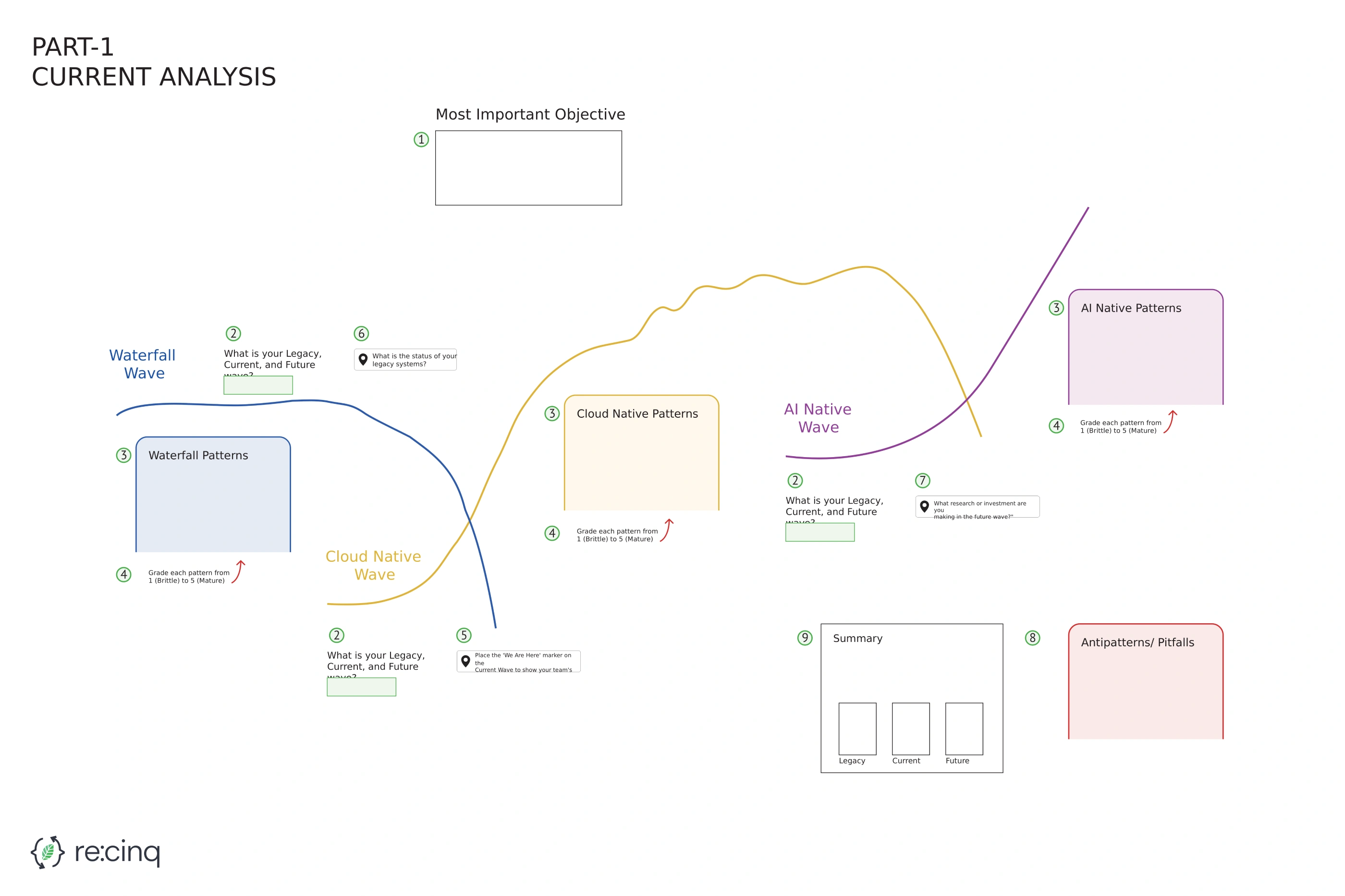

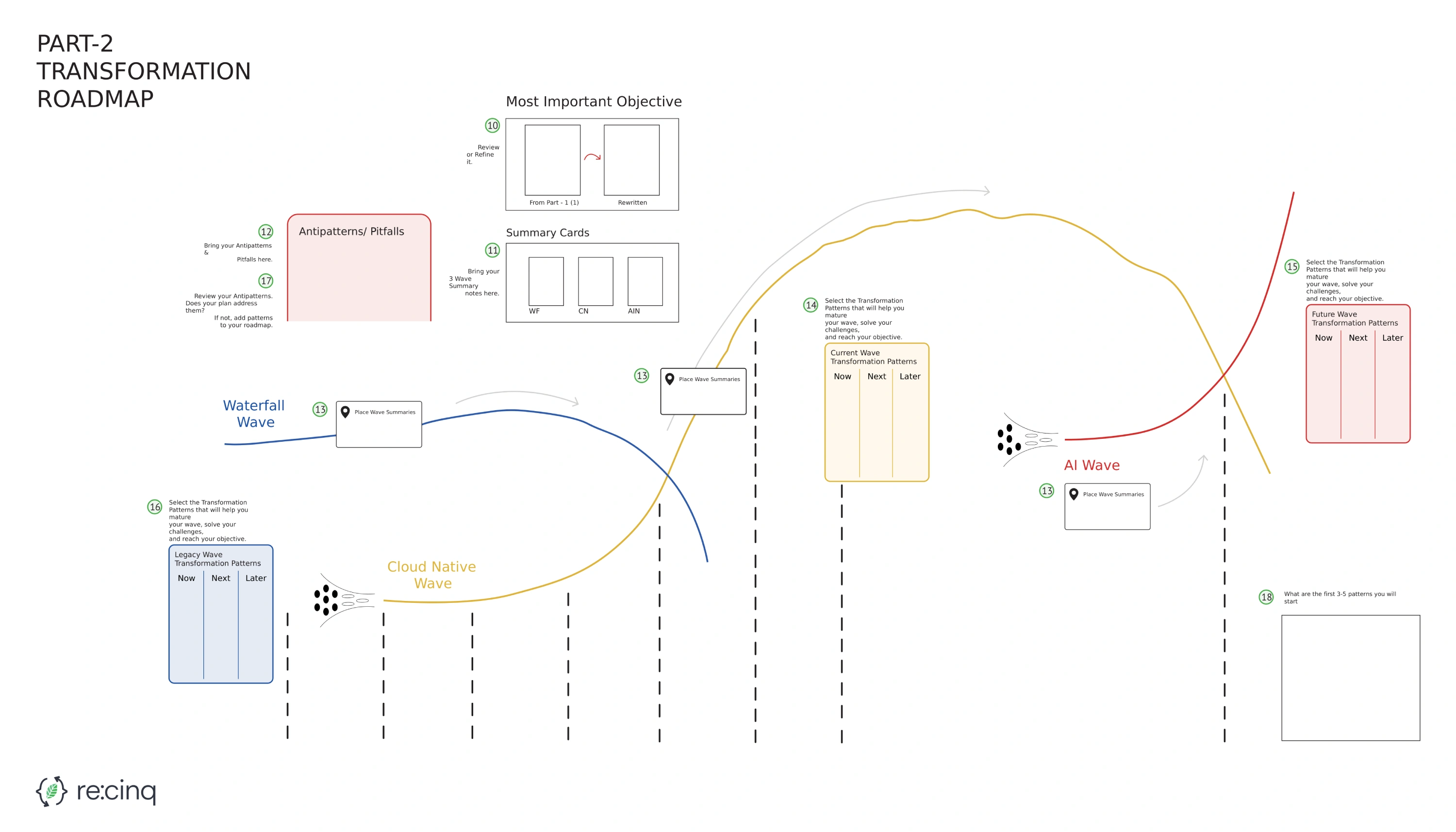

A Self-Facilitated Workshop Guide

Run a powerful, self-facilitated strategy session with your team. This free toolkit provides the instructions and posters you need to diagnose your current state and build an actionable roadmap using our pattern language.

The Instructions Guide

Your step-by-step playbook for facilitating the workshop. It provides detailed objectives, timelines, and expert tips to guide your team's conversation.

The Workshop Posters

Two large-format PDF posters (Part 1: Current State Analysis and Part 2: Transformation Planning) that act as the visual canvases for your workshop.

As Described In The Book

The complete theory, real-world case studies, and detailed descriptions of every pattern are found in our new book. Reading it is the best way to get the deep context needed to facilitate a powerful strategic conversation with your team. This entire ecosystem of tools begins with the book.

Bring the Patterns to Your Team with an Expert Guide

The self-service tools are powerful, but sometimes you need a dedicated expert. If you're facing a complex challenge and want to accelerate your progress, our facilitators can lead your team through a hands-on, highly focused workshop to create a roadmap for your AI initiatives.