AI Platform Engineering Reference Architecture

Learn how to build a comprehensive AI platform architecture. From development to…

Remember the Cloud Native hype? Enterprises struggling to "do" Kubernetes without being Cloud Native? Get ready for a rerun, but with higher stakes: AI. While you're still fumbling with Cloud Native, the AI Native wave is here, poised to transform everything. Organizations are scrambling to integrate AI, wrestling with concepts, practical use cases, and the fundamental shift in how we build and operate software systems.

The AI tooling ecosystem today resembles Cloud Native circa 2015 - immature, fragmented, but brimming with potential. Why are we fixated on AI when cloud costs remain high and internal development platforms are inefficient? We'll address this in our upcoming book, "From Cloud Native to AI Native," but for now, let's examine how to master Cloud Native before AI Native drowns you.

Learning from Cloud Native's journey is crucial. Past technology waves brought challenges, and those who adapted thrived. Today's Cloud Native ecosystem offers stable tools and well-documented practices, enabling speed and stability, which is the perfect foundation for an AI Native transformation.

Cloud Native is a fundamental shift in building and running applications, leveraging the cloud for speed, agility, stability, and resilience. It involves microservices, containers, orchestration, automation, continuous delivery, and a DevOps culture. Simply adopting these technologies doesn't make you Cloud Native if your organizational structure, processes, and culture haven't transformed. This is a transformational shift, essential for survival.

The lessons from many Cloud Native transformations are clear: transformational change goes beyond tools, big shifts build gradually, timing is everything, and it pays to focus on one major transformation at a time.

But here's the key insight: Cloud Native isn't just about running containers. It's about building adaptive, resilient systems that can evolve rapidly. These same principles are fundamental to AI Native systems, where models need continuous updates, data pipelines must scale dynamically, and infrastructure must handle unpredictable AI workloads.

We've identified six modes applicable to any technology adoption, especially relevant for organizations navigating the AI Native transformation:

Cloud Native has progressed through these modes: it started in Pioneering, bridged to Scaling, then moved on to Optimizing and Innovating while retiring old tech. Similarly, AI Native is already in the Pioneering, Bootstrapping, and early Scaling phases. New tech waves don't replace old ones overnight; organizations often run multiple waves in parallel, which requires orchestration between Cloud Native infrastructure and AI Native capabilities.

Many organizations stumble in Cloud Native efforts due to common mistakes. A prime example is missing the transformative wave, like traditional banks delaying modernization while challenger banks exploited Cloud Native. This "cost of being too late" leads to lost market share and frantic catch-up efforts. Grassroots transformations often occur when leadership ignores new trends, leading to talent drain. These anti-patterns reveal deeper organizational dysfunctions.

The same patterns are emerging with AI Native adoption. Organizations are making the mistake of treating AI as just another tool to optimize costs, deploying off-the-shelf chatbots without changing underlying workflows. This approach misses the fundamental shift that AI Native represents: building systems that learn, adapt, and improve automatically.

AI Native isn't about adding AI features to existing applications - it's about fundamentally rethinking how systems are designed, built, and operated. An AI Native system has intelligence built into its core architecture, enabling continuous learning, autonomous decision-making, and adaptive behavior.

Key characteristics of AI Native systems include:

Think of how modern recommendation systems work - they don't just serve static content but continuously learn from user behavior to improve recommendations. AI Native extends this concept across entire technology stacks.
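To make "continuously learn from user behavior" concrete, here is a minimal sketch of a recommender that updates its ranking after every interaction instead of waiting for a redeploy. The class name `OnlineRecommender`, the item names, and the smoothed click-rate scoring are all assumptions chosen for illustration; production systems use far richer models.

```python
from collections import defaultdict


class OnlineRecommender:
    """Hypothetical recommender that ranks items by observed click rate."""

    def __init__(self):
        self.shows = defaultdict(int)   # how often each item was shown
        self.clicks = defaultdict(int)  # how often each item was clicked

    def record(self, item, clicked):
        """Learn from a single user interaction as it happens."""
        self.shows[item] += 1
        if clicked:
            self.clicks[item] += 1

    def score(self, item):
        # Smoothed click rate: unseen items start at 0.5 rather than 0.
        return (self.clicks[item] + 1) / (self.shows[item] + 2)

    def recommend(self, items, k=1):
        """Return the k items with the highest current score."""
        return sorted(items, key=self.score, reverse=True)[:k]


rec = OnlineRecommender()
catalog = ["article_a", "article_b"]

rec.record("article_a", clicked=True)
rec.record("article_a", clicked=False)
rec.record("article_b", clicked=False)
first_pick = rec.recommend(catalog)

# New behavior arrives; the ranking shifts without any code change.
for _ in range(3):
    rec.record("article_b", clicked=True)
second_pick = rec.recommend(catalog)
```

The point of the sketch is the feedback loop: the same code serves different results as the data changes.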

Traditional software development follows predictable patterns: requirements gathering, design, implementation, testing, deployment. AI Native development is fundamentally different. It's iterative, experimental, and driven by data rather than rigid specifications.

Key differences in AI Native development:

This shift requires new skills, tools, and organizational structures. Engineering teams need to understand machine learning pipelines, data scientists need to think about production systems, and operations teams need to manage model lifecycles.

Just as Cloud Native required new infrastructure patterns (containers, orchestration, service mesh, ...), AI Native demands its own architectural pattern built upon Cloud Native foundations. One of the most popular patterns for enabling AI Native systems is FTI Architecture, a unified architectural approach that separates machine learning workloads into three distinct, independently managed pipelines: Feature Pipeline, Training Pipeline, and Inference Pipeline.

FTI Architecture is the microservices pattern for AI systems. It applies Cloud Native principles specifically to machine learning workloads, providing the same benefits of separation of concerns, independent scaling, and fault isolation that made Cloud Native successful. This architectural pattern streamlines the development, deployment, and maintenance of machine learning models across their entire lifecycle.

Feature Pipeline: This stage deals with collecting, processing, and transforming raw data into usable features for AI models.

Training Pipeline: This is where AI models learn to perform their tasks, typically run offline in powerful compute environments.

Inference Pipeline: This is the real-time execution of trained models in production environments to generate predictions and drive actions.
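The three pipelines can be sketched as separate functions that only communicate through shared stores. This is a toy illustration, not a reference implementation: `FeatureStore`, `ModelRegistry`, the event fields, and the threshold "model" are hypothetical stand-ins for whatever feature store, registry, and model type a real platform would use.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class FeatureStore:
    """Hypothetical in-memory stand-in for a feature store."""
    rows: list = field(default_factory=list)


@dataclass
class ModelRegistry:
    """Hypothetical in-memory stand-in for a model registry."""
    versions: list = field(default_factory=list)

    def latest(self):
        return self.versions[-1]


def to_feature(event):
    """Feature logic shared by training and serving to avoid skew."""
    return event["minutes_on_site"] / 60.0  # minutes -> hours


def feature_pipeline(raw_events, store):
    """Turn raw events into (feature, label) rows and store them."""
    for event in raw_events:
        label = 1.0 if event["purchased"] else 0.0
        store.rows.append((to_feature(event), label))


def training_pipeline(store, registry):
    """Fit a toy threshold model offline and register a new version."""
    positives = [f for f, y in store.rows if y == 1.0]
    negatives = [f for f, y in store.rows if y == 0.0]
    threshold = (mean(positives) + mean(negatives)) / 2
    registry.versions.append({"threshold": threshold})


def inference_pipeline(raw_event, registry):
    """Score one live event with the latest registered model."""
    return to_feature(raw_event) >= registry.latest()["threshold"]


store, registry = FeatureStore(), ModelRegistry()
events = [
    {"minutes_on_site": 30, "purchased": False},
    {"minutes_on_site": 60, "purchased": False},
    {"minutes_on_site": 120, "purchased": True},
    {"minutes_on_site": 180, "purchased": True},
]
feature_pipeline(events, store)
training_pipeline(store, registry)
prediction = inference_pipeline({"minutes_on_site": 150}, registry)
```

Because each function touches only the store or the registry, any one of them could be rewritten, rescheduled, or moved to different hardware without changing the other two.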

Building on the FTI Architecture foundation, AI Native systems require specialized infrastructure layers that extend Cloud Native capabilities:

Model Management Layer:

Data Platform:

Compute Infrastructure:

Monitoring & Observability:

Development Tools:

AI Native is the next disruptive wave, fundamentally changing how we build software. In our experience, organizations push "AI" for cost-cutting, for example by deploying an off-the-shelf chatbot, without a corresponding shift in workflow or upskilling. AI Native is about building with AI at its core, enabling learning, adaptation, and automated operations. GenAI is driving excitement, but the future of AI Native is still forming.

The pioneering imperative is crucial: don't wait. Small, autonomous teams should explore AI, run experiments, and upskill the workforce now. Consistent, deliberate effort through ongoing Pioneering builds organizational muscle memory, preparing for breakthroughs. Start Pioneering AI now; look for early wins to Bootstrap and Bridge-Build.

Organizations that successfully adopt FTI Architecture gain significant advantages in their AI Native transformation:

Independent Pipeline Scaling: Just as Cloud Native microservices enabled independent team ownership, FTI Architecture allows specialized teams to own Feature Pipeline, Training Pipeline, and Inference Pipeline operations separately. This reduces coordination overhead and enables faster iteration cycles.

Technology Flexibility per Pipeline: Each pipeline can use technologies optimized for its specific workload patterns. Feature Pipeline might leverage Apache Spark for large-scale data processing, Training Pipeline might use PyTorch with CUDA for model development, and Inference Pipeline might use TensorFlow for optimized edge deployment.

Fault Isolation Across Pipelines: Problems in one pipeline don't cascade to others. A Training Pipeline failure doesn't impact real-time Inference Pipeline operations, and Feature Pipeline changes can be tested independently before affecting model performance.
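One way to picture this isolation is a serving path that only ever reads the last successfully published model. In the sketch below, `ServingSlot`, `run_training_job`, and the lambda "models" are hypothetical names invented for the example; the assumption is that training publishes a model only after it completes without error.

```python
class ServingSlot:
    """Hypothetical holder for the model currently used in production."""

    def __init__(self, initial_model):
        self._current = initial_model

    def publish(self, model):
        self._current = model

    def current(self):
        return self._current


def run_training_job(slot, train_fn):
    """Run training in isolation and publish only on success."""
    try:
        model = train_fn()
    except Exception as exc:  # broad on purpose: any failure stays contained
        return f"training failed: {exc}"
    slot.publish(model)
    return "published"


def predict(slot, x):
    """Inference path: uses whatever model is currently published."""
    return slot.current()(x)


def broken_training():
    raise RuntimeError("GPU node lost")


slot = ServingSlot(initial_model=lambda x: x * 2)

# A failed training run leaves the serving path untouched.
failed_status = run_training_job(slot, broken_training)
answer_after_failure = predict(slot, 21)

# A successful run swaps the model in.
ok_status = run_training_job(slot, lambda: (lambda x: x * 3))
answer_after_success = predict(slot, 21)
```

The inference code never imports or calls anything from the training path, which is what keeps a training outage from becoming a serving outage.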

Resource Optimization by Workload: Organizations can right-size infrastructure for each pipeline type. GPU clusters scale up during Training Pipeline cycles, Feature Pipeline maintains steady-state processing capacity, and Inference Pipeline auto-scales with user demand patterns.
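As a rough illustration of right-sizing per pipeline, the sketch below computes a separate capacity figure for each workload. The function names, the per-replica capacity, the replica limits, and the GPU count are assumed numbers for the example, not recommendations.

```python
import math


def inference_replicas(requests_per_second, capacity_per_replica,
                       min_replicas=1, max_replicas=20):
    """Scale inference with demand, clamped to a configured range."""
    needed = math.ceil(requests_per_second / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))


def training_gpus(job_queued, gpus_per_job=8):
    """Allocate GPUs only while a training job is waiting or running."""
    return gpus_per_job if job_queued else 0


def capacity_plan(requests_per_second, job_queued, feature_workers=4):
    """Combine the three independent sizing decisions into one plan."""
    return {
        "feature_workers": feature_workers,  # steady-state capacity
        "training_gpus": training_gpus(job_queued),
        "inference_replicas": inference_replicas(requests_per_second, 100),
    }


quiet_night = capacity_plan(requests_per_second=40, job_queued=True)
busy_day = capacity_plan(requests_per_second=450, job_queued=False)
```

Each value is derived from a different signal (schedule, queue state, live traffic), which is only practical when the pipelines are deployed separately.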

Governance and Compliance Boundaries: FTI separation enables granular security controls and audit trails. Different compliance requirements can be applied to Feature Pipeline data processing, Training Pipeline model development, and Inference Pipeline production serving without affecting the entire system.

Organizations successful in AI Native transformation follow a predictable pattern:

AI-enabled systems add AI features to existing applications - like adding a chatbot to a traditional website. AI Native systems are built from the ground up with AI as a core architectural component. They learn continuously, adapt autonomously, and make intelligent decisions throughout the system, not just in specific features.

The transformation timeline varies significantly based on your starting point. Organizations with mature Cloud Native practices can begin AI Native transformation in 6-12 months for initial use cases. Full organizational transformation typically takes 2-3 years. The key is starting with pilot projects while building foundational capabilities.

AI Native teams need a blend of traditional software engineering and new AI-specific skills:

Absolutely. Small organizations often move faster than large enterprises. Start with:

The main risks include:

Cloud Native provides the perfect foundation for AI Native. Your existing container orchestration, microservices architecture, and DevOps practices directly support AI workloads.

Cloud Native Foundation Benefits:

AI Native Extensions:

The investment in Cloud Native infrastructure, team skills, and operational practices accelerates AI Native adoption rather than creating additional technical debt.

FTI Architecture is the definitive architectural pattern for AI Native systems. It separates machine learning workloads into three distinct, independently managed pipelines that together form a complete ML lifecycle:

Feature Pipeline: Transforms raw data into ML-ready features with consistent, reusable processing logic

Training Pipeline: Builds and updates models in isolated, resource-optimized batch environments

Inference Pipeline: Serves predictions with high availability and performance optimization in production

This architectural separation provides the same benefits as Cloud Native microservices: independent scaling, fault isolation, technology flexibility, and team autonomy. Organizations using FTI Architecture can iterate faster, scale more efficiently, and maintain higher system reliability than monolithic AI systems. FTI Architecture is to AI Native what microservices are to Cloud Native - the foundational pattern that enables everything else.

Key Takeaways: AI Native is imminent; pioneer continuously; learn from Cloud Native; adaptability is key; focus on value, not just automation. Getting Cloud Native right creates a strong platform for AI Native. Entering Pioneering mode now will allow your organization to capitalize on new technology as it's released.

The organizations that master this transition will build systems that don't just use AI - they think, learn, and evolve. The question isn't whether AI Native will transform your industry, but whether you'll lead that transformation or be left behind by it.

Learn how to build a comprehensive AI platform architecture. From development to…

Maximize ROI in platform engineering by treating platforms as products. Discover…

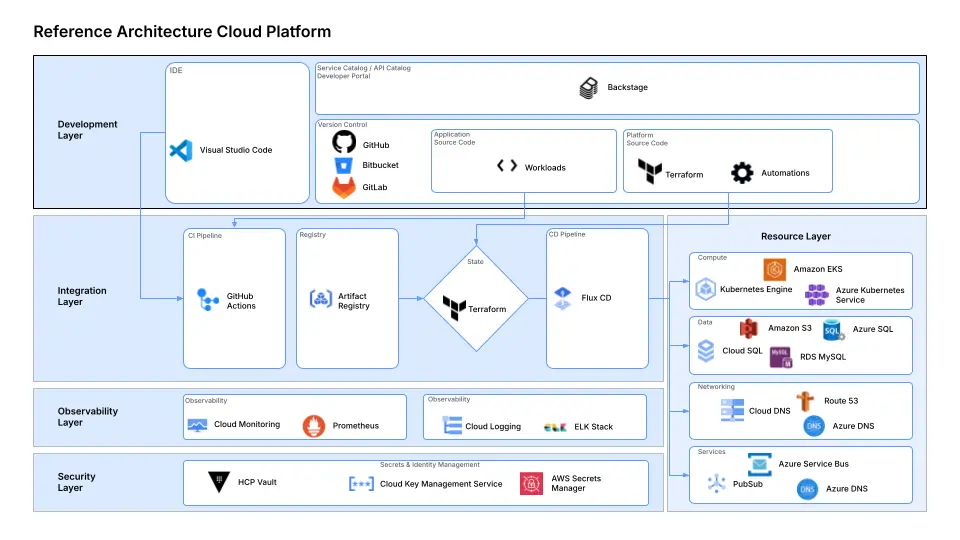

Build scalable platforms with Platform Engineering Reference Architecture. Empow…

Get a shared vocabulary of proven Transformation Patterns, common Anti-Patterns, and Paradigm Patterns to have more effective, data-driven conversations about your strategy and architecture.

For a personalized starting point, take our free online assessment. Your results will give you a detailed report on your current maturity and suggest the most relevant patterns to focus on first.

Every Tuesday, we deliver one short, powerful read on AI Native to help you lead better, adapt faster, and build smarter—based on decades of experience helping teams transform for real.