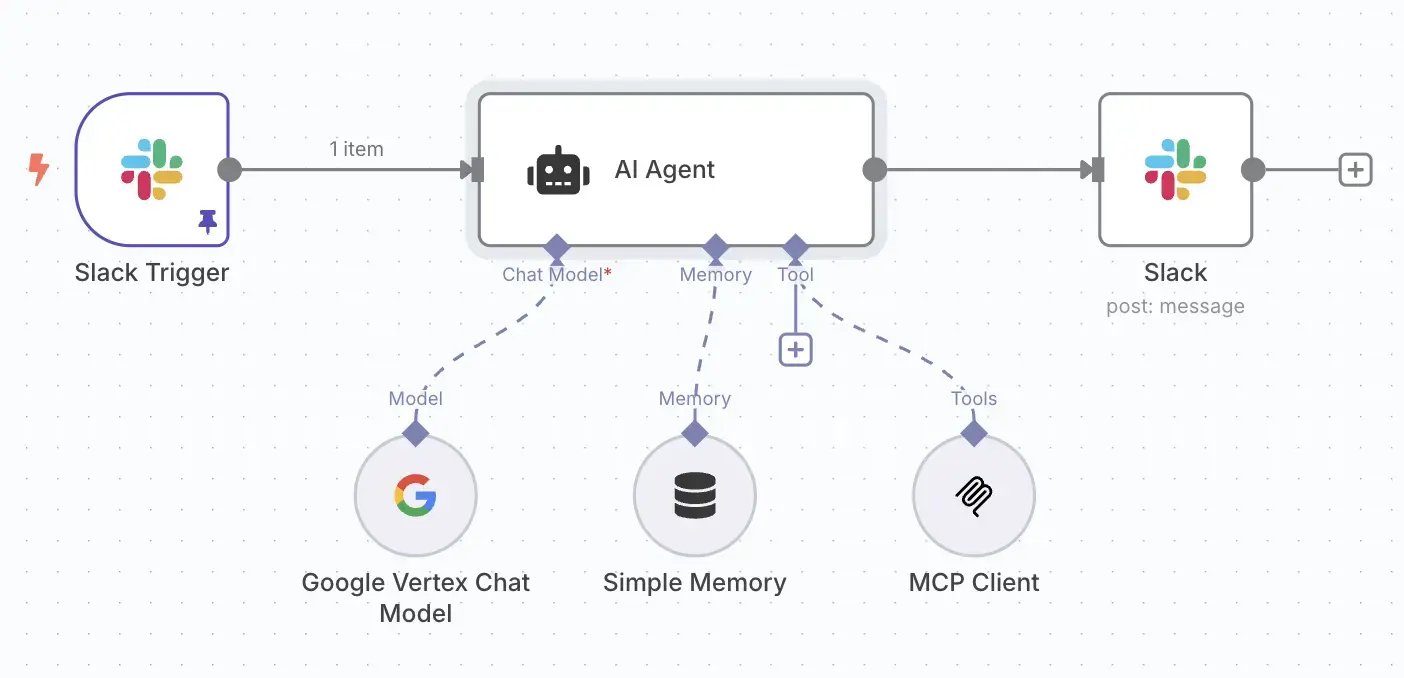

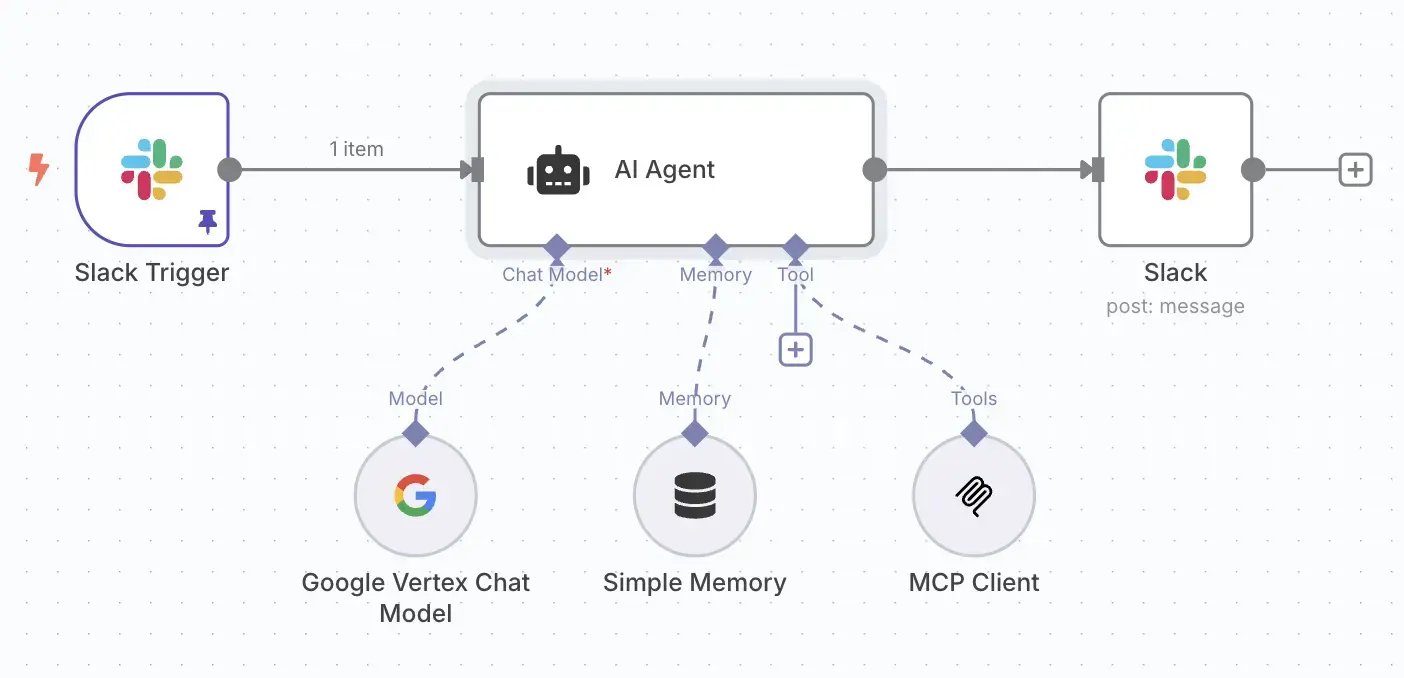


The landscape of artificial intelligence is undergoing a massive change. AI agents, once largely passive assistants or "copilots," are rapidly evolving into proactive, autonomous entities capable of making context-aware decisions and executing complex tasks.1 This surge in capability and complexity brings with it a fundamental requirement: these agents must interact effectively, not only with external systems and data sources but, increasingly, with each other.2, 3 As AI models become more powerful and the tasks they undertake grow in complexity, the limitations of a single, monolithic agent become apparent.3 Specialisation is emerging as a key trend, where different agents possess unique skills and knowledge. Such specialisation, however, necessitates collaboration, and meaningful collaboration relies on robust, well-defined communication, much as it does between microservices. It is in this context that traditional integration and API interaction models begin to show their limitations, particularly when dealing with agents that can reason, plan, and act with a degree of independence.1

Without standardised communication frameworks, AI agents risk operating in silos. This fragmentation leads to significant inefficiencies, heightened integration complexity, and a fundamental inability to perform sophisticated, multi-step operations that require coordinated effort.2, 4 A particularly pressing challenge is enabling AI agents developed by different vendors, or using different frameworks, to find common ground and work together seamlessly.2, 3, 5

To address these challenges, several protocols and communication paradigms have emerged, each playing a distinct role in the evolving AI ecosystem. This series of articles will explore three such pillars. In this first part, we dive into the Model Context Protocol (MCP).

Subsequent articles will explore Agent Communication Protocols (ACPs) and the more recent Agent2Agent (A2A) Protocol.

This series aims to describe the different protocols and to provide a clear understanding of what each protocol or paradigm entails, its common applications, and, crucially, how they relate to each other to form the communication backbone of increasingly sophisticated and collaborative AI systems.


The Model Context Protocol (MCP) has rapidly emerged as an open standard, originally developed by Anthropic.7, 8, 9 Its primary function is to standardise the way AI applications, including programmatic agents, connect to and interact with external tools, data sources, and services.1, 6, 7, 8, 9, 10, 11, 12 The analogy of MCP as a "USB-C port for AI" 8, 9, 13 aptly captures its ambition: to offer a uniform method for AI systems to plug into various external capabilities, much like USB-C simplifies device connectivity. This approach removes the need for a bespoke integration for each new tool or data source an AI model might need to access, thereby significantly reducing development overhead and complexity.4, 8, 9, 12, 13 It is important to note that MCP is not designed to replace existing protocols like REST or GraphQL; rather, it operates as a distinct layer above them, providing an abstraction that unifies these underlying interfaces for AI consumption.1

The fundamental aim of MCP is to address the persistent challenge of efficiently connecting powerful AI models with the external data sources and tools they require to perform effectively in real-world scenarios.4, 9 By establishing a common interaction pattern, MCP empowers AI applications to dynamically discover the tools available to them, inspect their functionalities, and invoke them as needed.1, 8, 9 This protocol facilitates robust two-way communication, enabling AI models not only to pull data from external systems (such as checking a calendar or retrieving flight information) but also to trigger actions within those systems (like rescheduling meetings or sending emails).8, 12
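The discover, inspect, and invoke pattern can be illustrated with a small in-process sketch. This is not the MCP SDK or its wire format: `ToolSpec`, `ToolRegistry`, and the two calendar tools are hypothetical names invented for illustration, and a real MCP server would expose the same steps over JSON-RPC rather than through direct method calls.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical description of one tool: its name and what it accepts."""
    name: str
    description: str
    input_schema: dict[str, Any]  # JSON Schema-style description of the arguments
    handler: Callable[..., Any]


class ToolRegistry:
    """Toy stand-in for a server that a client can query at runtime."""

    def __init__(self) -> None:
        self._tools: dict[str, ToolSpec] = {}

    def register(self, spec: ToolSpec) -> None:
        self._tools[spec.name] = spec

    def list_tools(self) -> list[str]:
        # Discovery: the caller learns what exists without hard-coding it.
        return sorted(self._tools)

    def describe(self, name: str) -> dict[str, Any]:
        # Inspection: the caller reads the description and the argument schema.
        spec = self._tools[name]
        return {"description": spec.description, "input_schema": spec.input_schema}

    def call(self, name: str, arguments: dict[str, Any]) -> Any:
        # Invocation: reject missing required arguments, then run the handler.
        spec = self._tools[name]
        required = spec.input_schema.get("required", [])
        missing = [key for key in required if key not in arguments]
        if missing:
            raise ValueError(f"missing arguments for {name}: {missing}")
        return spec.handler(**arguments)


# In-memory "calendar" so the example has something to read and to change.
calendar = {"standup": "09:00"}


def get_meeting_time(meeting: str) -> str:
    return calendar[meeting]


def reschedule_meeting(meeting: str, time: str) -> str:
    calendar[meeting] = time
    return calendar[meeting]


registry = ToolRegistry()
registry.register(ToolSpec(
    name="get_meeting_time",
    description="Read the start time of a meeting (pulls data).",
    input_schema={"type": "object", "required": ["meeting"]},
    handler=get_meeting_time,
))
registry.register(ToolSpec(
    name="reschedule_meeting",
    description="Move a meeting to a new start time (triggers an action).",
    input_schema={"type": "object", "required": ["meeting", "time"]},
    handler=reschedule_meeting,
))

available = registry.list_tools()
before = registry.call("get_meeting_time", {"meeting": "standup"})
after = registry.call("reschedule_meeting", {"meeting": "standup", "time": "10:30"})
```

The first tool only reads state and the second changes it, which mirrors the two directions of communication described above.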

MCP operates on a client-server architecture, designed to be lightweight yet powerful.

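Architecture: MCP distinguishes three roles. The host is the AI application the user works with, such as a chat client or an IDE assistant. Within the host, each MCP client maintains a one-to-one connection with a single MCP server. Servers are lightweight programs that expose specific capabilities to those clients.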

Primitives: MCP organises interactions around three core primitives (tools, resources, and prompts), providing a structured way for AI models to access and utilise external context.9
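As a rough illustration of how the three primitives differ, the sketch below pairs each one (tools, resources, and prompts, as named in the MCP specification) with the JSON-RPC methods used to list and use it, plus an example descriptor. The method and field names reflect the public specification as best understood here and should be checked against the revision you target; all example values (`create_issue`, the runbook URI, `summarise_incident`) are invented.

```python
from typing import Any

# Which methods list and use each primitive, and who typically decides to use it.
PRIMITIVES: dict[str, dict[str, str]] = {
    "tools": {"list": "tools/list", "use": "tools/call", "controlled_by": "model"},
    "resources": {"list": "resources/list", "use": "resources/read", "controlled_by": "application"},
    "prompts": {"list": "prompts/list", "use": "prompts/get", "controlled_by": "user"},
}

# Tool: a function the model may ask to run; arguments are described by a JSON Schema.
example_tool: dict[str, Any] = {
    "name": "create_issue",  # hypothetical tool
    "description": "Create an issue in a tracker.",
    "inputSchema": {
        "type": "object",
        "properties": {"title": {"type": "string"}},
        "required": ["title"],
    },
}

# Resource: readable context identified by a URI.
example_resource: dict[str, Any] = {
    "uri": "file:///docs/runbook.md",  # hypothetical location
    "name": "Runbook",
    "mimeType": "text/markdown",
}

# Prompt: a reusable template with named arguments.
example_prompt: dict[str, Any] = {
    "name": "summarise_incident",  # hypothetical prompt
    "description": "Summarise an incident for a status update.",
    "arguments": [{"name": "incident_id", "required": True}],
}


def summary(kind: str) -> str:
    """Describe one primitive using the table above."""
    entry = PRIMITIVES[kind]
    return f"{kind}: listed via {entry['list']}, used via {entry['use']}, {entry['controlled_by']}-controlled"


lines = [summary(kind) for kind in PRIMITIVES]
```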

Interaction Flow: The communication between an MCP client (acting on behalf of an AI model) and an MCP server typically follows a sequence of steps, leveraging the JSON-RPC 2.0 protocol for structured message exchange.12, 13

1. When a client connects to a server, an `initialize` message is exchanged to handshake protocol versions and server capabilities.13
2. The client discovers what the server offers through a `tools/list` method call.13 The server responds with a list of available capabilities, including their descriptions and input schemas.
3. To use a tool, the client sends a `tools/call` method, providing the tool name and necessary arguments.13

Security: MCP is designed with security in mind, often adopting a "local-first" approach by default, where servers run locally unless explicitly permitted for remote use.9 Explicit user approval is typically required for each tool or resource access, ensuring user control over data and actions.9 Authentication credentials for MCP servers can be managed securely, for instance, through environment variables passed to the server process.10 Some MCP clients implement features where the user must explicitly approve a tool's use by the AI agent.10
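The three messages in the interaction flow, together with the approval and credential practices described above, can be sketched as plain JSON-RPC 2.0 envelopes. This is a minimal sketch and not an official client: the helper names (`request`, `call_tool`, `server_env`), the `EXAMPLE_API_TOKEN` variable, the client name, and the tool being called are all assumptions, and the `protocolVersion` string is only an example of the date-based revision format.

```python
import json
import os
from itertools import count
from typing import Any, Callable, Optional

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched to them


def request(method: str, params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request envelope with a fresh id."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Step 1: handshake protocol version and capabilities.
initialize = request("initialize", {
    "protocolVersion": "2025-06-18",  # example value; use a revision both sides support
    "capabilities": {},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical client
})

# Step 2: ask the server which tools it offers.
list_tools = request("tools/list")


# Step 3: invoke a tool, but only after the user has approved this specific call.
def call_tool(
    name: str,
    arguments: dict[str, Any],
    approve: Callable[[str, dict[str, Any]], bool],
) -> dict[str, Any]:
    if not approve(name, arguments):
        raise PermissionError(f"user declined tool call: {name}")
    return request("tools/call", {"name": name, "arguments": arguments})


def server_env(token: str) -> dict[str, str]:
    """Environment for a locally launched server process.

    The credential travels in the environment (for example via
    subprocess.Popen(..., env=server_env(token))), not in any protocol message.
    """
    return {**os.environ, "EXAMPLE_API_TOKEN": token}  # hypothetical variable name


call = call_tool(
    "reschedule_meeting",  # hypothetical tool name
    {"meeting": "standup", "time": "10:30"},
    approve=lambda name, args: True,  # stand-in for a real confirmation dialog
)

for message in (initialize, list_tools, call):
    print(json.dumps(message))
```

In a real client the `approve` callback would prompt the user, and the messages would be written to the server over a transport such as standard input/output or HTTP instead of being printed.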

MCP was initiated by Anthropic 7, 8, 9 and has quickly gained traction, with support and implementations emerging from various organisations and the open-source community. Microsoft has integrated MCP with its Azure AI Agent Service 15, and coding assistants like Cursor or GitHub Copilot utilise MCP extensively.10 The proliferation of community-developed MCP servers for diverse tools and services further underscores its growing adoption.6, 7, 11

This rapid development and diverse adoption of MCP servers by numerous entities point towards a strong industry consensus on the necessity of such a standard. The core problem that MCP aims to solve is the need for one-off integrations between AI models and each tool or data source 4, 7, 9, which is a widespread and significant pain point for developers and organisations. An open protocol like MCP 7, 8 is attractive because it promises enhanced interoperability and a reduction in duplicated development effort. The sheer variety of example servers, ranging from general-purpose utilities like executing Python code 6 to specific enterprise tools like Stripe or GitHub integrations 11, demonstrates MCP's adaptability across many different domains.

Furthermore, MCP represents a fundamental shift in how AI models operate. Instead of being isolated "brains" relying solely on their pre-existing training data, AI models are becoming interconnected "hubs" capable of actively leveraging a vast array of external capabilities. MCP's primary function is to facilitate this connection between LLMs and external tools and data sources.6, 9 This fundamentally alters their operational paradigm, allowing them to interact with and utilise external systems in real-time.12 This ability to access and act upon current, external context makes them significantly more powerful and applicable to a much broader range of real-world tasks and challenges.


The Model Context Protocol, then, serves as a crucial bridge, enabling AI agents and applications to reach beyond their inherent knowledge and interact dynamically with the vast world of external tools and data. By standardising this connectivity, MCP not only simplifies development and enhances interoperability but also empowers AI to perform more complex, context-aware tasks. However, connecting to tools is just one facet of the broader AI communication challenge.
